文章目录

文档说明基于shardingsphere的分表实现方案算法方案比对组件依赖版本测试库表结构基于哈希取模分片说明shardingsphere.yml配置

基于自定义一致性哈希算法取模分片说明自定义算法实现shardingsphere.yml配置

基于时间取模分片说明shardingsphere.yml配置

功能验证点基于orderId hash分片写入-验证通过基于createShardTime 时间分片写入-验证通过验证跨表条件数据变更-验证通过验证跨表条件数据变更(按时间窗口或状态跨表更新)-验证通过验证跨表列表查询(条件:状态/时间区间)-验证通过验证跨表分页查询(条件:状态/时间区间)-验证通过验证跨表条件数据删除(按状态/时间窗口)-验证通过通过id查询数据(跨表)-验证通过验证通过分表字段查询数据-验证通过验证自定义SQL(XML)查询自动分表-验证通过验证自定义SQL(XML) 连表查询(关联表不分表)-验证通过多表分表写入+查询-验证通过

文档说明

本文档基于shardingsphere-jdbc-core-spring-boot-starter 5.2.1版本验证分表能力,同时基于实际的业务场景对比几类分表策略和实现方案

分库和分表是两个截然不同的功能,分表只要我们在Springboot中引入shardingsphere-jdbc这个依赖库即可,但是分库就要单独部署一个服务shardingsphere-proxy,其他服务连接shardingsphere-proxy,从而实现分库的功能

基于shardingsphere的分表实现方案

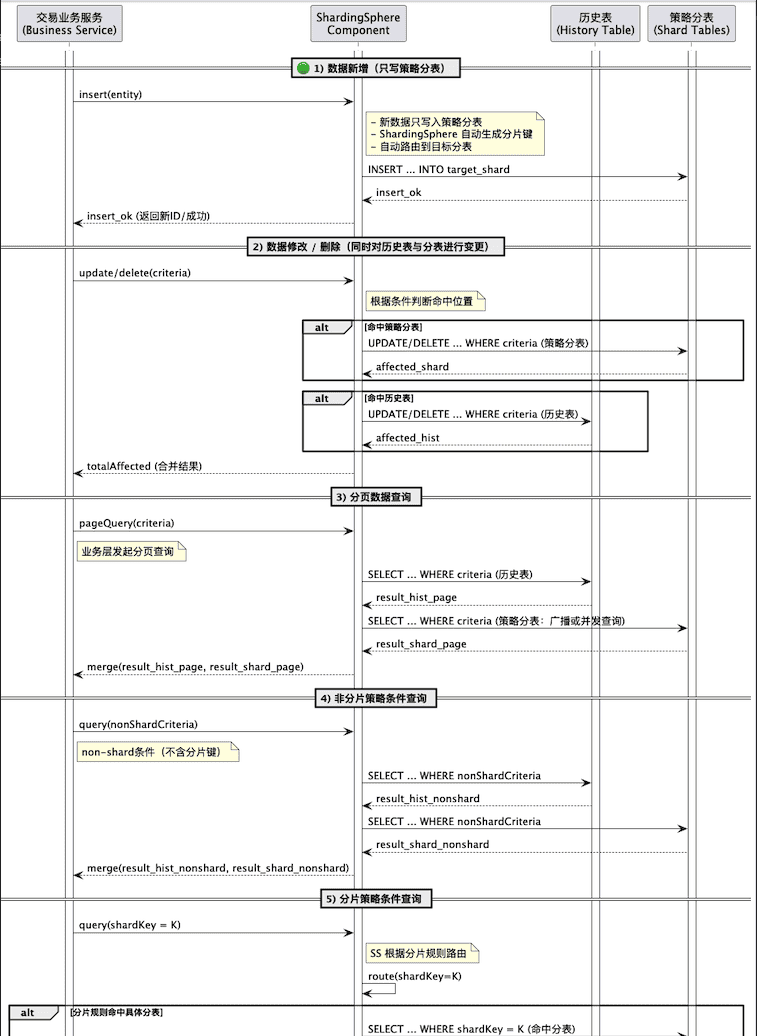

主要针对在途的业务数据,可以实现在不迁移旧表的前提条件下进行分表操作

1.新增数据只写入策略分表

2.数据查询、修改、删除同时判断命中历史表和新增的策略分表

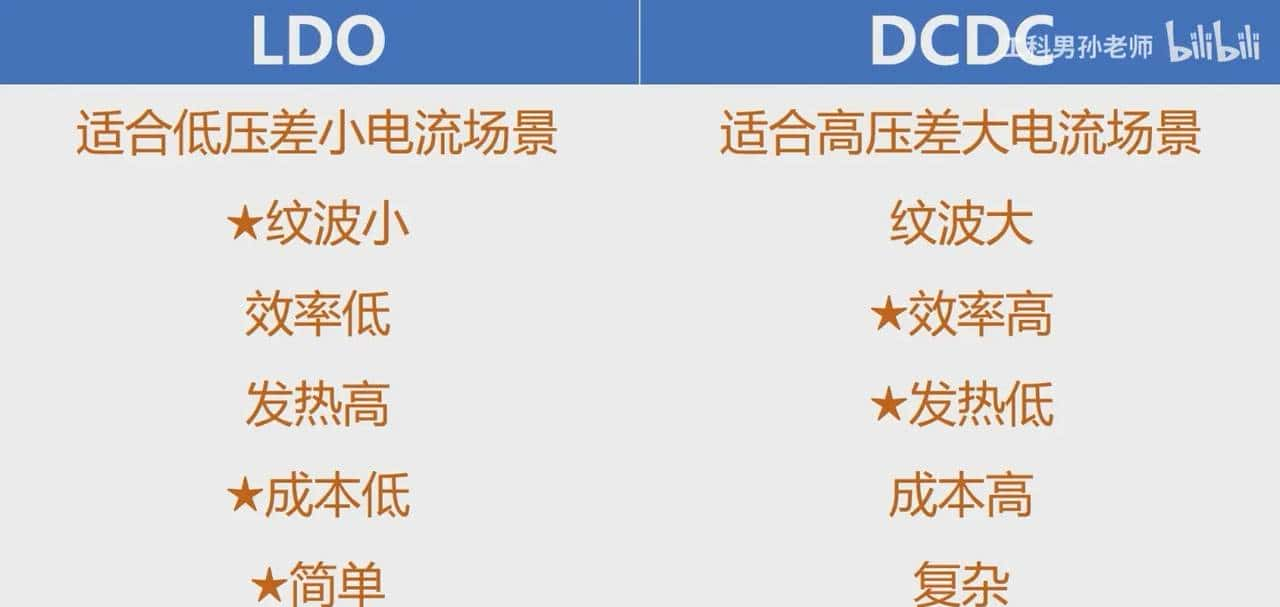

算法方案比对

业务常用分片算法对比

| 对比项 | 哈希取模(HASH_MOD) | 一致性哈希(CONSISTENT_HASH) | 时间分片(TIME_INTERVAL) |

|---|---|---|---|

| 数据分布均匀性 | 哈希均匀 | 虚拟节点调优,相对均匀 | 时间集中,可能存在热点数据分表问题 |

| 可扩容性 | 需全量迁移 | 平滑扩容,但是仍需要迁移数据 | 自然扩容 |

| 官方支持度 | 组件内置实现 | 需要自定义实现 | 组件内置实现 |

| 分片键要求 | 哈希型字段(如ID) | 哈希型字段(如ID) | 时间字段(如create_time) |

| 使用场景 | 适用于分表数量固定,在设计阶段就预设了分表方案的场景(旧表新增分表或者新增子表都需要全量数据迁移) | 适用于分表数量固定,在设计阶段就预设了分表方案的场景(旧表新增分表或者新增子表都需要数据迁移,非全量) | 适用于分表数量动态,时间区分度高,设计阶段预设分表或者需要对历史表进行分表的场景(不需要对历史数据迁移) |

| 使用注意事项 | hash字段尽量选择字符串类型 | hash字段尽量选择字符串类型 | 时间选择创建时间,业务上不允许修改创建时间数据 |

shardingsphere支持内置的分片算法

| 算法类型 | 说明 |

|---|---|

| INLINE | 表达式计算分片,简单直观 |

| HASH_MOD | 哈希取模分片,适合字符串或长整型主键 |

| MOD | 整数取模分片 |

| RANGE | 按区间分片(时间或范围场景) |

| VOLUME_RANGE | 按数据量大小自动区间分片 |

| BOUNDARY_RANGE | 自定义边界值分片 |

| AUTO | 自动分片(实验性) |

| COMPLEX_INLINE | 多字段内联分片 |

组件依赖版本

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

<version>8.0.33</version>

</dependency>

<dependency>

<groupId>com.baomidou</groupId>

<artifactId>mybatis-plus-boot-starter</artifactId>

<version>3.5.3.1</version>

</dependency>

<dependency>

<groupId>org.apache.shardingsphere</groupId>

<artifactId>shardingsphere-jdbc-core-spring-boot-starter</artifactId>

<version>5.2.1</version>

</dependency>

<dependency>

<groupId>org.apache.shardingsphere</groupId>

<artifactId>shardingsphere-standalone-mode-repository-jdbc-h2</artifactId>

<version>5.2.1</version>

</dependency>

<dependency>

<groupId>com.h2database</groupId>

<artifactId>h2</artifactId>

<version>2.2.224</version>

<scope>compile</scope>

</dependency>

<dependency>

<groupId>org.yaml</groupId>

<artifactId>snakeyaml</artifactId>

<version>1.33</version>

</dependency>

测试库表结构

CREATE TABLE `t_order` (

`id` varchar(50) NOT NULL,

`order_id` varchar(100) NOT NULL DEFAULT '' COMMENT '订单ID',

`user_id` bigint(20) NOT NULL COMMENT '用户ID',

`amount` decimal(10,2) NOT NULL COMMENT '金额',

`status` int(11) DEFAULT '0' COMMENT '状态',

`create_time` datetime DEFAULT CURRENT_TIMESTAMP COMMENT '创建时间',

`update_time` datetime DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP COMMENT '更新时间',

PRIMARY KEY (`id`),

KEY `idx_user_id` (`user_id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4;

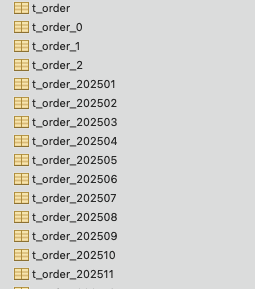

hash分表:t_order_0、t_order_1、t_order_2、t_order_3

时间分表:t_order_202501、t_order_202502、t_order_202503、......

基于哈希取模分片

说明

对分片键(如 order_id)取哈希,再对分表数取模:

tableIndex = hash(order_id) % N

🟢 该分片算法计算简单(O(1) 取模),哈希函数天然均匀分布,但是扩容困难,当分片数量 N 改变时,所有旧数据的取模结果都会变化。

举例:N=4 → N=5 时,几乎所有 key 的表位置都会改变,意味着全量迁移。

该方案对历史表兼容性较差,如果要对历史表添加分表逻辑,需要对全量历史数据进行迁移,更适用于分表数固定,新建库表的场景

shardingsphere.yml配置

spring:

shardingsphere:

mode:

type: Standalone

props:

sql-show: true # 控制台打印SQL

datasource:

names: ds0

ds0:

type: com.zaxxer.hikari.HikariDataSource

driver-class-name: com.mysql.cj.jdbc.Driver

jdbc-url: jdbc:mysql://192.168.xxx.xxx:xxxx/gpx_basic?useUnicode=true&characterEncoding=UTF-8&serverTimezone=GMT%2B8&useSSL=false

username: xxxx

password: xxxxxx

rules:

sharding:

tables:

t_order:

actual-data-nodes: ds0.t_order_$->{0..3}

table-strategy:

standard:

sharding-column: order_id

sharding-algorithm-name: order-hash-mod

# 分片算法定义

sharding-algorithms:

#哈希取模分片,适合字符串或长整型主键

order-hash-mod:

type: HASH_MOD

props:

sharding-count: 4 # 分表总数,需与 actual-data-nodes 数量一致

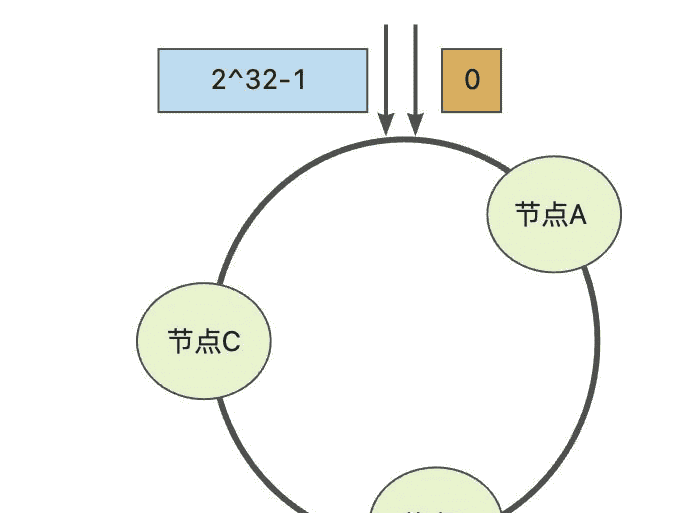

基于自定义一致性哈希算法取模分片

说明

将所有分表映射到一个环(Hash Ring)上,键根据其哈希值定位到最近的节点(顺时针方向)。

扩容时,只会影响部分数据映射(平滑迁移)。

🟢 该方案是为了解决基于原生的哈希取模分片算法不能兼容历史表数据而衍生出的实现,使用该方案可以实现不修改历史表的前提下实现分表,即新数据写入新的分表,查询,变更、删除同时操作历史表和分表。

支持平滑扩容/缩容:新增/删除分表时,仅少量 key 映射会变化(仍然需要迁移),性能略低于取模(但差距通常 <5%)。

自定义算法实现

/**

* 通用一致性哈希分片算法(Standard)。

*

* props 支持参数(全部可选,下面给出默认值):

* - virtual-nodes-num: 虚拟节点数(int,默认 128)

* - shard-table-regex: 参与哈希环的表名正则(默认 ".*_d+$" 即匹配以 _数字 结尾的表)

* - hash-algo: "crc32" 或 "crc32-unsigned"(目前实现 crc32,保留扩展口子)

*

* 行为概要:

* - 环中只包含匹配 shard-table-regex 的物理表(例如 t_order_0..t_order_3);

* - 精确路由(Precise) 根据 value 的 hash 定位到单个分表(写入落到分表);

* - 范围/无分片键 查询返回所有 availableTargetNames(包括旧表),由 ShardingSphere 广播查询。

*/

public final class ConsistentHashShardingAlgorithm implements StandardShardingAlgorithm<Comparable<?>> {

private int virtualNodes = 128;

private String shardTableRegex = ".*_d+$";

private final SortedMap<Long, String> ring = new ConcurrentSkipListMap<>();

@Override

public void init(final Properties props) {

if (props == null) return;

String v = props.getProperty("virtual-nodes-num");

if (v != null) {

try {

virtualNodes = Integer.parseInt(v);

} catch (NumberFormatException ignored) {

}

}

String regex = props.getProperty("shard-table-regex");

if (regex != null && regex.trim().length() > 0) {

shardTableRegex = regex.trim();

}

// 预留 hash-algo 支持(当前仅 CRC32)

}

/**

* 精确路由 —— 走哈希环,只包含匹配规则的分表,确保写入落到分表(非 legacy 表)

*/

@Override

public String doSharding(final Collection<String> availableTargetNames,

final PreciseShardingValue<Comparable<?>> shardingValue) {

if (availableTargetNames == null || availableTargetNames.isEmpty()) {

throw new IllegalArgumentException("availableTargetNames is empty.");

}

// 只挑分表构建 ring

Collection<String> shardTables = filterShardTables(availableTargetNames);

ensureRingBuilt(shardTables);

Object v = shardingValue.getValue();

if (v == null) {

// 若无法精确路由(值为 null),返回环上的第一个分表以保证可写(也可以改成抛异常)

if (ring.isEmpty()) {

// 如果 ring 也为空,降级返回 availableTargetNames 的第一个

for (String s : availableTargetNames) {

return s;

}

}

return ring.get(ring.firstKey());

}

long keyHash = hash(String.valueOf(v));

SortedMap<Long, String> tail = ring.tailMap(keyHash);

if (!tail.isEmpty()) {

return tail.get(tail.firstKey());

}

return ring.get(ring.firstKey());

}

/**

* 范围查询 / 无分片键查询:返回所有 availableTargetNames(包含 legacy 表与分表),

* 由 ShardingSphere 做广播查询,这样能同时读到旧表和分表数据。

*/

@Override

public Collection<String> doSharding(final Collection<String> availableTargetNames,

final RangeShardingValue<Comparable<?>> rangeShardingValue) {

return availableTargetNames;

}

@Override

public Properties getProps() {

return new Properties();

}

@Override

public String getType() {

// CLASS_BASED 模式下 algorithmClassName 会被反射创建,此处返回任意字符串

return "CLASS_BASED";

}

// ---------------- helper ----------------

/**

* 根据 shardTableRegex 过滤出物理分表(例如 t_order_0,t_order_1...)

*/

private Collection<String> filterShardTables(final Collection<String> targets) {

List<String> list = new ArrayList<String>();

if (targets == null) return list;

for (String t : targets) {

if (t == null) continue;

try {

if (t.matches(shardTableRegex)) {

list.add(t);

}

} catch (Exception ignored) {

}

}

return list;

}

/**

* 根据当前分表集合构建一致性哈希环(使用 CRC32)

* 若 ring 为空或目标分表数量发生变化则重建

*/

private void ensureRingBuilt(final Collection<String> shardTables) {

synchronized (ring) {

if (shardTables == null || shardTables.isEmpty()) {

ring.clear();

return;

}

int expectedSlotCount = shardTables.size() * Math.max(1, virtualNodes);

if (ring.isEmpty() || ring.size() != expectedSlotCount) {

ring.clear();

for (String t : shardTables) {

for (int i = 0; i < virtualNodes; i++) {

String vnode = t + "#" + i;

ring.put(hash(vnode), t);

}

}

}

}

}

/**

* CRC32 hash -> unsigned long

*/

private static long hash(final String key) {

CRC32 crc = new CRC32();

byte[] bytes = key.getBytes();

crc.update(bytes, 0, bytes.length);

return crc.getValue() & 0xffffffffL;

}

}

shardingsphere.yml配置

spring:

shardingsphere:

mode:

type: Standalone

props:

sql-show: true # 控制台打印SQL

datasource:

names: ds0

ds0:

type: com.zaxxer.hikari.HikariDataSource

driver-class-name: com.mysql.cj.jdbc.Driver

jdbc-url: jdbc:mysql://192.168.8.240:20032/gpx_basic?useUnicode=true&characterEncoding=UTF-8&serverTimezone=GMT%2B8&useSSL=false

username: xxxx

password: xxxxxx

rules:

sharding:

tables:

t_order:

actual-data-nodes: ds0.t_order,ds0.t_order_$->{0..3} # # 同时包含旧表和分表,查询可以命中旧表;写时算法只选择分表

table-strategy:

standard:

sharding-column: order_id

sharding-algorithm-name: order-consistent

# 分片算法定义

sharding-algorithms:

#自定义一致性hash算法实现

order-consistent:

type: CLASS_BASED

props:

strategy: STANDARD # strategy: STANDARD 告诉 ShardingSphere 这个自定义类用于标准分片(single key → StandardShardingAlgorithm)。若你实现的是复合键(ComplexKeysShardingAlgorithm)则应写 strategy: COMPLEX 并实现对应接口。

algorithmClassName: com.example.sharding.config.ConsistentHashShardingAlgorithm

virtual-nodes-num: 128 #虚拟节点数量,增加分布均匀度(建议 32~128)

shard-table-regex: "^t_order_d+$" # 可配置,类会用它来筛分表名,为了实现分库时新增只操作分表不操作旧表

基于时间取模分片

说明

可以基于年份、月分、周进行数据分片

🟢天然支持扩容,并且shardingsphere方案可以兼容历史数据,但是容易产生数据分布不均的问题

shardingsphere.yml配置

spring:

shardingsphere:

mode:

type: Standalone

props:

sql-show: true

datasource:

names: ds0

ds0:

type: com.zaxxer.hikari.HikariDataSource

driver-class-name: com.mysql.cj.jdbc.Driver

jdbc-url: jdbc:mysql://192.168.xxx.xxx:xxxx/gpx_basic?useUnicode=true&characterEncoding=UTF-8&serverTimezone=GMT%2B8&useSSL=false

username: xxxx

password: xxxxxxxx

rules:

sharding:

tables:

t_order:

# 实际表节点:主表 + 按月分表

actual-data-nodes: ds0.t_order,ds0.t_order_${202501..202512}

# 表分片策略:按创建时间分表

table-strategy:

standard:

sharding-column: create_time

sharding-algorithm-name: order-time-interval

# ✅ 分片算法定义(使用 INTERVAL,而不是 INLINE)

sharding-algorithms:

order-time-interval:

type: INTERVAL

props:

datetime-pattern: "yyyy-MM-dd HH:mm:ss"

datetime-lower: "2025-01-01 00:00:00"

datetime-upper: "2030-12-31 23:59:59"

sharding-suffix-pattern: "yyyyMM"

datetime-interval-unit: "MONTHS"

datetime-interval-amount: 1

功能验证点

基于orderId hash分片写入-验证通过

/**

* 1) 验证数据新增到不同分表(不手填 orderId,shardingsphere 自动生成并路由)

* POST http://localhost:8080/orders/validate/insert-auto-orderid

*/

@PostMapping("/validate/shardingByOrderId/insert-auto-orderid")

public Map<String, Object> insertAutoOrderId(@RequestParam(defaultValue = "20") int count) {

Map<String, Object> res = new LinkedHashMap<String, Object>();

// 时间窗口起点

LocalDateTime start = LocalDateTime.now();

int inserted = 0;

for (int i = 0; i < count; i++) {

Order o = new Order();

// 不要设置 o.setOrderId(...)

o.setOrderId(UUID.randomUUID().toString().replace("-", ""));

o.setUserId(1000L + i);

o.setAmount(new BigDecimal("9.99"));

o.setStatus(0);

o.setCreateShardTime(LocalDateTime.now());

o.setUpdateTime(LocalDateTime.now());

inserted += orderMapper.insert(o);

}

res.put("inserted", inserted);

res.put("startWindow", start.toString());

res.put("success", true);

return res;

}

基于createShardTime 时间分片写入-验证通过

/**

* 1) 验证数据新增到不同分表(不手填 orderId,shardingsphere 自动生成并路由)

* POST http://localhost:8080/orders/validate/insert-auto-orderid

*/

@PostMapping("/validate/shardingByCreateTime/insert-auto-createTime")

public Map<String, Object> insertAutoCreateTime() {

Map<String, Object> result = new HashMap<>();

SimpleDateFormat sdf = new SimpleDateFormat("yyyy-MM-dd");

SimpleDateFormat tableSdf = new SimpleDateFormat("yyyyMM");

try {

// 创建不同月份的订单

String[] dates = {

// 1月

"2025-01-10", "2025-01-20",

// 2月

"2025-02-05", "2025-02-15",

// 3月

"2025-03-08", "2025-03-25",

// 10月(当前月)

"2025-10-01", "2025-10-15"

};

int count = 0;

for (int i = 0; i < dates.length; i++) {

Date createTime = sdf.parse(dates[i]);

LocalDateTime ldt = LocalDateTime.ofInstant(createTime.toInstant(), ZoneId.systemDefault());

Order order = orderService.create((long) (i + 1), new BigDecimal(100 * (i + 1)), ldt);

String table = "t_order_" + tableSdf.format(createTime);

System.out.println("订单ID: " + order.getOrderId() + " -> 分片表: " + table + " (时间: " + dates[i] + ")");

count++;

}

result.put("success", true);

result.put("message", "创建" + count + "个订单,分布在不同月份的表中");

result.put("count", count);

} catch (Exception e) {

result.put("success", false);

result.put("message", e.getMessage());

}

return result;

}

/**

* 创建订单(带时间参数)

*/

public Order create(Long userId, BigDecimal amount, LocalDateTime createTime) {

Order order = new Order();

order.setUserId(userId);

order.setAmount(amount);

order.setStatus(0);

order.setCreateShardTime(createTime);

order.setUpdateTime(createTime);

orderMapper.insert(order);

return order;

}

验证跨表条件数据变更-验证通过

/**

* 2) 验证跨表条件数据变更(按时间窗口或状态跨表更新)

* PUT http://localhost:8080/orders/validate/update-status-window

*/

@PutMapping("/validate/update-status-window")

public Map<String, Object> updateStatusWindow(@RequestParam(defaultValue = "4") int tableCount,

@RequestParam(defaultValue = "1") Integer status,

@RequestParam(required = false) @DateTimeFormat(pattern = "yyyy-MM-dd HH:mm:ss") LocalDateTime start) {

Map<String, Object> res = new LinkedHashMap<String, Object>();

if (start == null) {

// 默认取近 5 分钟

start = LocalDateTime.now().minusMinutes(5);

}

LambdaUpdateWrapper<Order> uw = new LambdaUpdateWrapper<Order>()

.ge(Order::getCreateShardTime, start)

.set(Order::getStatus, status);

int affected = orderMapper.update(null, uw);

res.put("affected", affected);

res.put("startWindow", start.toString());

res.put("success", true);

return res;

}

验证跨表条件数据变更(按时间窗口或状态跨表更新)-验证通过

/**

* 2) 验证跨表条件数据变更(按时间窗口或状态跨表更新)

* PUT http://localhost:8080/orders/validate/update-status-window

*/

@PutMapping("/validate/update-status-window")

public Map<String, Object> updateStatusWindow(@RequestParam(defaultValue = "4") int tableCount,

@RequestParam(defaultValue = "1") Integer status,

@RequestParam(required = false) @DateTimeFormat(pattern = "yyyy-MM-dd HH:mm:ss") LocalDateTime start) {

Map<String, Object> res = new LinkedHashMap<String, Object>();

if (start == null) {

// 默认取近 5 分钟

start = LocalDateTime.now().minusMinutes(5);

}

LambdaUpdateWrapper<Order> uw = new LambdaUpdateWrapper<Order>()

.ge(Order::getCreateShardTime, start)

.set(Order::getStatus, status);

int affected = orderMapper.update(null, uw);

res.put("affected", affected);

res.put("startWindow", start.toString());

res.put("success", true);

return res;

}

验证跨表列表查询(条件:状态/时间区间)-验证通过

/**

* 3) 验证跨表列表查询(条件:状态/时间区间)

* GET http://localhost:8080/orders/validate/list

*/

@GetMapping("/validate/list")

public Map<String, Object> list(@RequestParam(required = false) Integer status,

@RequestParam(required = false) @DateTimeFormat(pattern = "yyyy-MM-dd HH:mm:ss") LocalDateTime start,

@RequestParam(required = false) @DateTimeFormat(pattern = "yyyy-MM-dd HH:mm:ss") LocalDateTime end) {

Map<String, Object> res = new LinkedHashMap<String, Object>();

LambdaQueryWrapper<Order> qw = new LambdaQueryWrapper<Order>();

if (status != null) {

qw.eq(Order::getStatus, status);

}

if (start != null) {

qw.ge(Order::getCreateShardTime, start);

}

if (end != null) {

qw.le(Order::getCreateShardTime, end);

}

qw.orderByAsc(Order::getOrderId);

List<Order> rows = orderMapper.selectList(qw);

res.put("size", rows == null ? 0 : rows.size());

res.put("list", rows);

res.put("success", true);

return res;

}

验证跨表分页查询(条件:状态/时间区间)-验证通过

/**

* 4) 验证跨表分页查询(条件:状态/时间区间)

* GET http://localhost:8080/orders/validate/page

*/

@GetMapping("/validate/page")

public Map<String, Object> page(@RequestParam(defaultValue = "1") long pageNo,

@RequestParam(defaultValue = "10") long pageSize,

@RequestParam(required = false) Integer status,

@RequestParam(required = false) @DateTimeFormat(pattern = "yyyy-MM-dd HH:mm:ss") LocalDateTime start,

@RequestParam(required = false) @DateTimeFormat(pattern = "yyyy-MM-dd HH:mm:ss") LocalDateTime end) {

Map<String, Object> res = new LinkedHashMap<String, Object>();

// MyBatis-Plus 分页插件需要手动设置分页参数

// pageNo 必须为 >=1

if (pageNo < 1) {

pageNo = 1;

}

if (pageSize < 1) {

pageSize = 10;

}

Page<Order> page = new Page<>(pageNo, pageSize, true);

LambdaQueryWrapper<Order> lambdaQueryWrapper = new LambdaQueryWrapper<Order>();

if (status != null) {

lambdaQueryWrapper.eq(Order::getStatus, status);

}

if (start != null) {

lambdaQueryWrapper.ge(Order::getCreateShardTime, start);

}

if (end != null) {

lambdaQueryWrapper.le(Order::getCreateShardTime, end);

}

// 为了兼容分页,有些分库分表中间件,仅允许单一字段全局有序分页

lambdaQueryWrapper.orderByDesc(Order::getCreateShardTime).orderByDesc(Order::getOrderId);

IPage<Order> result = orderMapper.selectPage(page, lambdaQueryWrapper);

// 构建分页响应格式

Map<String, Object> pageData = new LinkedHashMap<String, Object>();

pageData.put("records", result.getRecords());

pageData.put("current", result.getCurrent());

pageData.put("size", result.getSize());

pageData.put("pages", result.getPages());

pageData.put("total", result.getTotal());

res.put("data", pageData);

res.put("success", true);

return res;

}

验证跨表条件数据删除(按状态/时间窗口)-验证通过

/**

* 5) 验证跨表条件数据删除(按状态/时间窗口)

* DELETE http://localhost:8080/orders/validate/delete

*/

@DeleteMapping("/validate/delete")

public Map<String, Object> delete(@RequestParam(required = false) Integer status,

@RequestParam(required = false) @DateTimeFormat(pattern = "yyyy-MM-dd HH:mm:ss") LocalDateTime start) {

Map<String, Object> res = new LinkedHashMap<String, Object>();

LambdaQueryWrapper<Order> qw = new LambdaQueryWrapper<Order>();

if (status != null) {

qw.eq(Order::getStatus, status);

}

if (start != null) {

qw.ge(Order::getCreateShardTime, start);

}

int affected = orderMapper.delete(qw);

res.put("affected", affected);

// 验证各分表剩余(按窗口)

if (start == null) {

start = LocalDateTime.now().minusMinutes(10);

}

for (int i = 0; i < TABLE_COUNT; i++) {

String t = "t_order_" + i;

int left = safeCountRecent(t, start);

res.put(t + "_recent_left", left);

}

res.put("success", true);

return res;

}

通过id查询数据(跨表)-验证通过

/**

* 6) 通过id查询数据(跨表)

* GET http://localhost:8080/orders/validate/getById?id=xxx

*/

@GetMapping("/validate/getById")

public Map<String, Object> getById(@RequestParam String id) {

Map<String, Object> res = new LinkedHashMap<String, Object>();

Order order = orderMapper.selectById(id);

res.put("data", order.toString());

res.put("success", true);

return res;

}

验证通过分表字段查询数据-验证通过

/**

* 2) 验证通过分表字段查询数据

* GET http://localhost:8080/orders/validate/getByOrderId?orderId=xxx

*/

@GetMapping("/validate/getByOrderId")

public Map<String, Object> getByOrderId(@RequestParam String orderId) {

Map<String, Object> res = new LinkedHashMap<String, Object>();

LambdaQueryWrapper<Order> qw = new LambdaQueryWrapper<Order>();

if (orderId != null) {

qw.eq(Order::getOrderId, orderId);

}

qw.orderByAsc(Order::getOrderId);

List<Order> rows = orderMapper.selectList(qw);

res.put("size", rows == null ? 0 : rows.size());

res.put("list", rows);

res.put("success", true);

return res;

}

验证自定义SQL(XML)查询自动分表-验证通过

OrderMapper.xml

<?xml version="1.0" encoding="UTF-8"?>

<!DOCTYPE mapper PUBLIC "-//mybatis.org//DTD Mapper 3.0//EN" "http://mybatis.org/dtd/mybatis-3-mapper.dtd">

<mapper namespace="com.bosssoft.gpmscloud.framework.test.mapper.OrderMapper">

<resultMap type="com.bosssoft.gpmscloud.framework.test.model.po.Order" id="Order">

<result property="id" column="id" jdbcType="VARCHAR"/>

<result property="orderId" column="order_id" jdbcType="VARCHAR"/>

<result property="userId" column="user_id" jdbcType="INTEGER"/>

<result property="amount" column="amount" jdbcType="DECIMAL"/>

<result property="status" column="status" jdbcType="INTEGER"/>

<result property="createShardTime" column="create_shard_time" jdbcType="TIMESTAMP"/>

<result property="updateTime" column="update_time" jdbcType="TIMESTAMP"/>

</resultMap>

<!-- 自定义 SQL,未指定分表,由 ShardingSphere 自动路由 -->

<select id="selectByUserId" resultType="com.bosssoft.gpmscloud.framework.test.model.po.Order">

SELECT * FROM t_order

WHERE user_id = #{userId}

</select>

<select id="selectInIds" resultType="com.bosssoft.gpmscloud.framework.test.model.po.Order">

SELECT * FROM t_order

WHERE id IN

<foreach collection="ids" item="id" open="(" separator="," close=")">

#{id}

</foreach>

</select>

<insert id="insertOrder">

INSERT INTO t_order(id, order_id, user_id, amount, status, create_shard_time, update_time)

VALUES (#{id}, #{orderId}, #{userId}, #{amount}, #{status}, #{createShardTime}, #{updateTime})

</insert>

</mapper>

controller

@GetMapping("/validate/checkXml/selectByUserId")

public Map<String, Object> selectByUserId(@RequestParam String userId) {

Map<String, Object> res = new LinkedHashMap<String, Object>();

List<Order> data = orderMapper.selectByUserId(userId);

res.put("data", data);

res.put("success", true);

return res;

}

@PostMapping("/validate/checkXml/selectInIds")

public Map<String, Object> selectInIds(@RequestBody List<String> ids) {

Map<String, Object> res = new LinkedHashMap<String, Object>();

List<Order> data = orderMapper.selectInIds(ids);

res.put("data", data);

res.put("success", true);

return res;

}

@GetMapping("/validate/checkXml/insertOrder")

public Map<String, Object> insertOrder() throws ParseException {

Map<String, Object> res = new LinkedHashMap<String, Object>();

Order o = new Order();

o.setOrderId(UUID.randomUUID().toString().replace("-", ""));

o.setUserId(999L);

o.setAmount(new BigDecimal("9.99"));

o.setStatus(0);

SimpleDateFormat sdf = new SimpleDateFormat("yyyy-MM-dd");

Date createTime = sdf.parse("2025-05-10");

LocalDateTime ldt = LocalDateTime.ofInstant(createTime.toInstant(), ZoneId.systemDefault());

//LocalDateTime.now()

o.setCreateShardTime(ldt);

o.setUpdateTime(LocalDateTime.now());

int data = orderMapper.insertOrder(o);

res.put("data", data);

res.put("success", true);

return res;

}

验证自定义SQL(XML) 连表查询(关联表不分表)-验证通过

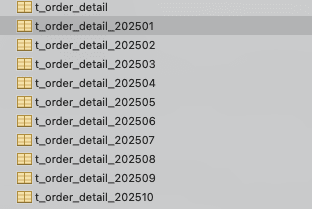

表结构

CREATE TABLE `t_order_detail` (

`id` varchar(50) NOT NULL COMMENT 'ID',

`order_id` varchar(50) NOT NULL COMMENT 'orderId',

`detail` varchar(1024) DEFAULT '' COMMENT 'detail',

`create_user_id` varchar(50) DEFAULT '' COMMENT '创建人用户ID',

`create_user_name` varchar(50) DEFAULT '' COMMENT '创建人名称',

`CREATE_TIME` datetime NOT NULL DEFAULT CURRENT_TIMESTAMP COMMENT '创建时间',

`update_user_id` varchar(50) DEFAULT '' COMMENT '修改人用户ID',

`update_user_name` varchar(50) DEFAULT '' COMMENT '修改人名称',

`UPDATE_TIME` datetime NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP COMMENT '更新时间',

PRIMARY KEY (`id`) USING BTREE

) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4 COMMENT='订单详情表';

mapper

<select id="selectDetailByUserId" resultType="java.util.Map">

SELECT

o.*,

tod.*

FROM t_order o

LEFT JOIN t_order_detail tod

ON tod.order_id = o.order_id

WHERE o.user_id = #{userId}

</select>

controller

@GetMapping("/validate/checkXml/selectDetailByUserId")

public Map<String, Object> selectDetailByUserId(@RequestParam String userId) {

Map<String, Object> res = new LinkedHashMap<String, Object>();

List<Map<String, Object>> data = orderMapper.selectDetailByUserId(userId);

res.put("data", data);

res.put("success", true);

return res;

}

多表分表写入+查询-验证通过

表结构

CREATE TABLE `t_order_detail` (

`id` varchar(50) NOT NULL COMMENT 'ID',

`order_id` varchar(50) NOT NULL COMMENT 'orderId',

`detail` varchar(1024) DEFAULT '' COMMENT 'detail',

`create_user_id` varchar(50) DEFAULT '' COMMENT '创建人用户ID',

`create_user_name` varchar(50) DEFAULT '' COMMENT '创建人名称',

`CREATE_TIME` datetime NOT NULL DEFAULT CURRENT_TIMESTAMP COMMENT '创建时间',

`update_user_id` varchar(50) DEFAULT '' COMMENT '修改人用户ID',

`update_user_name` varchar(50) DEFAULT '' COMMENT '修改人名称',

`UPDATE_TIME` datetime NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP COMMENT '更新时间',

PRIMARY KEY (`id`) USING BTREE

) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4 COMMENT='订单详情表';

shardingsphere.yml配置

spring:

shardingsphere:

mode:

type: Standalone

props:

sql-show: true # 控制台打印SQL

datasource:

names: ds0

ds0:

type: com.alibaba.druid.pool.DruidDataSource

driver-class-name: com.mysql.cj.jdbc.Driver

url: jdbc:mysql://192.168.8.240:20032/gpx_basic?useUnicode=true&characterEncoding=UTF-8&serverTimezone=GMT%2B8&useSSL=false

username: root

password: d4Y82A0Pdb

initialSize: 5

minIdle: 5

maxActive: 20

maxWait: 60000

timeBetweenEvictionRunsMillis: 60000

minEvictableIdleTimeMillis: 300000

validationQuery: SELECT 1

testWhileIdle: true

testOnBorrow: false

testOnReturn: false

rules:

sharding:

#不加binding-tables join 时会出现跨分片路由效率低下 / 广播 SQL 等问题

binding-tables:

- t_order,t_order_detail

tables:

t_order:

#actual-data-nodes: ds0.t_order_$->{0..3} # # 同时包含旧表和分表,查询可以命中旧表;写时算法只选择分表

actual-data-nodes: ds0.t_order,ds0.t_order_${202501..202512}

table-strategy:

standard:

sharding-column: create_shard_time

sharding-algorithm-name: order-time-interval

t_order_detail:

actual-data-nodes: ds0.t_order_detail,ds0.t_order_detail_${202501..202512}

table-strategy:

standard:

sharding-column: create_shard_time

sharding-algorithm-name: order-time-interval

# 分片算法定义(使用 INTERVAL,而不是 INLINE)

order-time-interval:

type: INTERVAL

props:

datetime-pattern: "yyyy-MM-dd HH:mm:ss"

datetime-lower: "2025-01-01 00:00:00"

datetime-upper: "2030-12-31 23:59:59"

sharding-suffix-pattern: "yyyyMM"

datetime-interval-unit: "MONTHS"

datetime-interval-amount: 1

mapper

<select id="selectDetailByUserId" resultType="java.util.Map">

SELECT

o.*,

tod.*

FROM t_order o

LEFT JOIN t_order_detail tod

ON tod.order_id = o.order_id

WHERE o.user_id = #{userId}

</select>

controller

/**

* 1) 验证数据新增到不同分表(不手填 orderId,shardingsphere 自动生成并路由)

* POST http://localhost:8080/orders/validate/insert-auto-orderid

*/

@PostMapping("/validate/shardingByCreateTime/insert-auto-createTime")

public Map<String, Object> insertAutoCreateTime() {

Map<String, Object> result = new HashMap<>();

SimpleDateFormat sdf = new SimpleDateFormat("yyyy-MM-dd");

SimpleDateFormat tableSdf = new SimpleDateFormat("yyyyMM");

try {

// 创建不同月份的订单

String[] dates = {

// 1月

"2025-01-10", "2025-01-20",

// 2月

"2025-02-05", "2025-02-15",

// 3月

"2025-03-08", "2025-03-25",

// 10月(当前月)

"2025-10-01", "2025-10-15"

};

int count = 0;

for (int i = 0; i < dates.length; i++) {

Date createTime = sdf.parse(dates[i]);

LocalDateTime ldt = LocalDateTime.ofInstant(createTime.toInstant(), ZoneId.systemDefault());

Order order = orderService.create((long) (i + 1), new BigDecimal(100 * (i + 1)), ldt);

String table = "t_order_" + tableSdf.format(createTime);

System.out.println("订单ID: " + order.getOrderId() + " -> 分片表: " + table + " (时间: " + dates[i] + ")");

count++;

}

result.put("success", true);

result.put("message", "创建" + count + "个订单,分布在不同月份的表中");

result.put("count", count);

} catch (Exception e) {

e.printStackTrace();

result.put("success", false);

result.put("message", e.getMessage());

}

return result;

}

/**

* 创建订单(带时间参数)

*/

public Order create(Long userId, BigDecimal amount, LocalDateTime createTime) {

String orderId = UUID.randomUUID().toString().replace("-", "");

Order order = new Order();

order.setOrderId(orderId);

order.setUserId(userId);

order.setAmount(amount);

order.setStatus(0);

order.setCreateShardTime(createTime);

order.setUpdateTime(createTime);

orderMapper.insert(order);

OrderDetail detail = new OrderDetail();

detail.setOrderId(orderId);

detail.setDetail("Detail-" + orderId);

detail.setCreateShardTime(createTime);

orderDetailMapper.insert(detail);

return order;

}

@GetMapping("/validate/checkXml/selectDetailByUserId")

public Map<String, Object> selectDetailByUserId(@RequestParam String userId) {

Map<String, Object> res = new LinkedHashMap<String, Object>();

List<Map<String, Object>> data = orderMapper.selectDetailByUserId(userId);

res.put("data", data);

res.put("success", true);

return res;

}

暂无评论内容