Multi-Camera Video Stitching, Encoding, and RTSP Streaming with the L4T Multimedia API


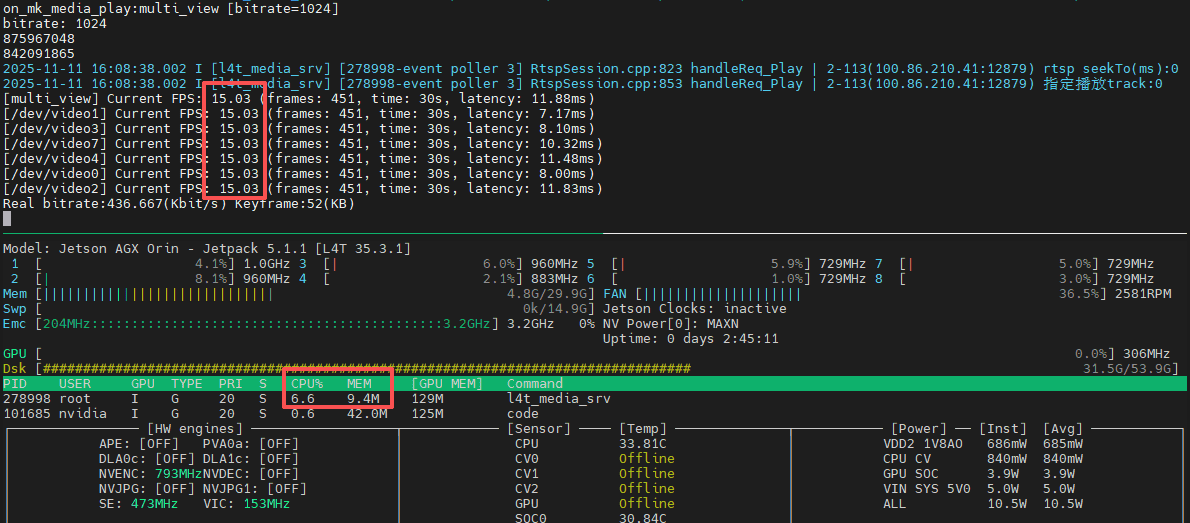


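I. Background and Requirements

1. What is multi-camera video stitching?

Imagine a vehicle with six cameras (front, rear, left, right, and so on), each recording independently. Multi-camera stitching combines these six separate video feeds into one large composite frame, much like the multi-view wall in a surveillance control room.

2. Why do this?

- Surround-view monitoring: autonomous driving, security surveillance, and similar scenarios need to show several viewing angles at once.
- Bandwidth savings: transmitting one stitched stream uses fewer network resources than transmitting six independent streams.
- Lower latency: hardware-accelerated processing reduces the delay added by video processing.

3. Technical challenges

- High performance: six 1080p video streams must be processed in real time, which demands a lot of compute.
- Low latency: the end-to-end delay from capture to display must be as small as possible.
- Low CPU usage: video processing must not starve other important tasks.

II. Optimization Approach

1. Core idea: a zero-copy architecture

Traditional video processing is like moving boxes: every stage copies the data from A to B, which costs time. The goal here is to process the data in place and avoid unnecessary copies.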
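To get a feel for how much data those copies involve, the sketch below estimates the raw bandwidth of the uncompressed camera feeds. It assumes six 1920x1080 cameras delivering UYVY (2 bytes per pixel) at 15 fps. The frame rate is borrowed from the sample output configuration and may differ from the actual capture rate, and the helper name `RawBytesPerSecond` is made up for illustration.

```cpp
#include <cassert>
#include <cstdint>

// Raw bytes per second produced by `cameras` uncompressed video streams.
constexpr std::uint64_t RawBytesPerSecond(std::uint64_t width,
                                          std::uint64_t height,
                                          std::uint64_t bytes_per_pixel,
                                          std::uint64_t fps,
                                          std::uint64_t cameras) {
  return width * height * bytes_per_pixel * fps * cameras;
}

// 1920 * 1080 * 2 = 4,147,200 bytes per frame;
// 4,147,200 * 15 fps * 6 cameras = 373,248,000 bytes per second.
static_assert(RawBytesPerSecond(1920, 1080, 2, 15, 6) == 373248000ULL,
              "six UYVY 1080p streams at 15 fps");
```

Under these assumptions, every extra CPU copy of the full data set costs roughly 373 MB/s of additional memory traffic, which is the overhead a zero-copy pipeline avoids.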

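2. Key techniques

2.1 Direct access to DMA buffers

// Use DMA buffers so the GPU can read camera data directly
buffer_request.memory = V4L2_MEMORY_DMABUF;

In plain terms: this gives the GPU a fast lane to the camera data, so it can read frames directly without routing them through the CPU.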
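As a minimal sketch of what DMABUF streaming means at the V4L2 level, the helpers below fill in the two structures involved: the buffer request and the per-buffer descriptor that carries a dmabuf file descriptor instead of a memory pointer. The helper names `MakeDmabufRequest` and `MakeDmabufBuffer` are hypothetical; only the V4L2 structures and constants come from `linux/videodev2.h`.

```cpp
#include <cassert>
#include <cstdint>
#include <linux/videodev2.h>

// Build the REQBUFS request for a capture queue backed by DMABUF memory.
inline v4l2_requestbuffers MakeDmabufRequest(std::uint32_t count) {
  v4l2_requestbuffers request = {};
  request.count = count;
  request.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
  request.memory = V4L2_MEMORY_DMABUF;
  return request;
}

// Describe one buffer to queue: the driver receives a dmabuf fd,
// so no pixel data is copied through user space.
inline v4l2_buffer MakeDmabufBuffer(std::uint32_t index, int dmabuf_fd) {
  v4l2_buffer buffer = {};
  buffer.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
  buffer.memory = V4L2_MEMORY_DMABUF;
  buffer.index = index;
  buffer.m.fd = dmabuf_fd;
  return buffer;
}
```

The structures would then be handed to `ioctl(fd, VIDIOC_REQBUFS, &request)` and `ioctl(fd, VIDIOC_QBUF, &buffer)`, which is what the full listing does in `SetupBuffers()`.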

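2.2 Unified GPU memory management

// All processing happens in GPU-accessible memory
NvBufSurf::NvAllocate(&cam_params, actual_buffer_count, input_fds.data());

In plain terms: all video data stays on the GPU's "workbench", so nothing has to be shuttled back and forth between the CPU and the GPU.

2.3 Hardware-accelerated conversion and stitching

// Convert the pixel format and stitch the views on the GPU
NvBufSurfTransformMultiInputBufCompositeBlend(batch_surf.data(), pdstSurf, &composite_params);

In plain terms: dedicated hardware performs the format conversion and stitching quickly, like using a professional tool instead of doing the work by hand.

2.4 Hardware encoding

// Use the NVIDIA hardware encoder
video_encoder_ = NvVideoEncoder::createVideoEncoder("enc0");

In plain terms: a dedicated encoder block compresses the video, which is much faster and far more power-efficient than software encoding on the CPU.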

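III. System Architecture

1. Data flow

Camera capture (zero-copy) → DMA buffer (zero-copy) → format conversion (GPU-accelerated) → stitching (GPU-accelerated) → hardware encoding (dedicated encoder) → RTSP streaming (network push)

2. Module responsibilities

2.1 Camera capture module (V4l2CameraSource)

- Reads data from the physical camera
- Uses DMA buffers to avoid memory copies
- Automatically converts YUV422 to YUV420

2.2 Stitching module (MultiCameraStreamer)

- Stitches the six views into one frame according to a predefined layout
- Supports a custom position and size for each view
- Uses the GPU for efficient image compositing

2.3 Video encoding module (HwVideoEncoder)

- Uses the NVIDIA hardware encoder
- Supports H.264 and H.265 (HEVC) encoding
- Supports dynamic bitrate control

2.4 Network service module

- Provides the RTSP video streaming service
- Supports multiple simultaneous viewers
- Allows the video quality to be adjusted on demand

3. Performance advantages

- Very low CPU usage: the heavy computation is done by the GPU and dedicated hardware.
- Memory efficiency: the zero-copy architecture greatly reduces memory bandwidth usage.
- Low latency: the whole pipeline is hardware-accelerated, so processing latency is on the order of milliseconds.

4. Hardware requirements

- An NVIDIA Jetson platform (with built-in video processing hardware)
- Multiple USB or MIPI cameras
- Enough GPU memory (4 GB or more recommended)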

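IV. Walkthrough

1. Environment setup

Make sure the following are installed on your Jetson device:

- JetPack SDK (includes the L4T Multimedia API)
- CUDA toolkit
- The required development libraries

2. Configuration files

2.1 Camera layout configuration (camera_cfg.json)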

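{
  "global": {
    "camera_bufers": 2,        // number of buffers per camera (the key is spelled this way in the code)
    "encoder_buffers": 2,      // number of encoder buffers
    "use_hevc": false,         // whether to encode with H.265
    "fps": 15,                 // output frame rate
    "max_bitrate_mps": 2,      // maximum bitrate (Mbps)
    "print_interval_sec": 30   // interval between statistics printouts (seconds)
  },
  "layout": {
    "left_front": {"x":0, "y":190, "width":432, "height":274},
    // ... layout entries for the other cameras
  },
  "DEV": {
    "left_front": {"dev":"/dev/video1", "width":1920, "height":1080},
    // ... device entries for the other cameras
  }
}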

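Configuration notes:

- layout: the position (x, y) and size (width, height) of each camera's tile in the stitched output frame.
- DEV: the device node and capture resolution of each camera. The name of this section is the argument passed to the program on the command line (./l4t_media_srv DEV).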
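Because the layout rectangles are entered by hand, it can help to validate them before starting the pipeline. The following is a minimal sketch of such a check, assuming the 1920x1080 output canvas used in the full listing. The `Viewport` struct and both helper functions are illustrative and not part of the original program.

```cpp
#include <cassert>

// One tile of the stitched frame, as described by a "layout" entry.
struct Viewport {
  int x;
  int y;
  int width;
  int height;
};

// True when the tile is non-empty and lies completely inside the canvas.
inline bool FitsInside(const Viewport &v, int canvas_width, int canvas_height) {
  return v.width > 0 && v.height > 0 && v.x >= 0 && v.y >= 0 &&
         v.x + v.width <= canvas_width && v.y + v.height <= canvas_height;
}

// True when two tiles share at least one pixel (touching edges do not count).
inline bool Overlaps(const Viewport &a, const Viewport &b) {
  return a.x < b.x + b.width && b.x < a.x + a.width &&
         a.y < b.y + b.height && b.y < a.y + a.height;
}
```

With the sample entry above, `FitsInside({0, 190, 432, 274}, 1920, 1080)` holds. Whether overlapping tiles should be rejected depends on the intended layout, so `Overlaps` is offered only as an optional sanity check.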

2.2 RTSP server configuration (mk_mediakit.ini)

[rtp_proxy]
lowLatency=1   # enable low-latency mode
gop_cache=1    # enable the GOP cache for a better playback experience

3. Core code walkthrough

3.1 Camera initialization

// Create a camera source instance
video_sources.push_back(std::make_unique<V4l2CameraSource>(
    config.camera_configs[name].device,
    config.camera_configs[name].width,
    config.camera_configs[name].height));

// Initialize the camera; the arguments are the size of its tile in the layout
video_sources[i]->Initialize(position.width, position.height);

3.2 Core stitching logic

// Wait until every camera has a new frame
waitForAllCamerasReady(video_sources);

// Dequeue an output-plane buffer from the encoder
int output_plane_index = m_encoder->deQueue(v4l2_buf);

// Collect the current frame of every camera
for (size_t i = 0; i < video_sources.size(); i++) {
    int buffer_index = video_sources[i]->GetLatestFrame(&out_dmabuf_fd, camera_ts);
    // Add the frame's surface to the batch
    batch_surf.push_back(nvbuf_surf);
}

// Stitch all views on the GPU
composite_params.params.input_buf_count = buffer_index_arr.size();
ret = NvBufSurfTransformMultiInputBufCompositeBlend(batch_surf.data(), pdstSurf, &composite_params);
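The first step above, waiting until every camera has a fresh frame, can be sketched without any Jetson dependencies. The example below polls a set of sources until all of them report a new frame or a deadline passes. `FakeSource` is a stand-in invented for this sketch; in the real program the flag is set by each camera's capture thread.

```cpp
#include <atomic>
#include <cassert>
#include <chrono>
#include <thread>
#include <vector>

// Stand-in for a camera source: a capture thread would set `frame_updated`.
struct FakeSource {
  std::atomic<bool> frame_updated{false};
  bool isFrameReady() const { return frame_updated.load(); }
};

// Poll every source until all report a fresh frame or the deadline passes.
// The sources are checked at least once, even with a zero timeout.
inline bool WaitForAllReady(const std::vector<FakeSource *> &sources,
                            std::chrono::milliseconds timeout) {
  const auto deadline = std::chrono::steady_clock::now() + timeout;
  do {
    bool all_ready = true;
    for (const FakeSource *source : sources) {
      if (!source->isFrameReady()) {
        all_ready = false;
        break;
      }
    }
    if (all_ready) {
      return true;
    }
    std::this_thread::sleep_for(std::chrono::milliseconds(1));
  } while (std::chrono::steady_clock::now() < deadline);
  return false;
}
```

Polling with a 1 ms sleep keeps the code simple at the cost of up to a millisecond of added latency. A condition variable signalled by the capture threads would be an alternative design.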

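3.3 Encoding and network transmission

// Encoder callback: sends the encoded data out over RTSP
static void rtspWriteCallback(const uint8_t *encoded_data, size_t data_size,
                              bool is_key_frame, void *user_data) {
    MultiCameraStreamer *obj = (MultiCameraStreamer *)(user_data);

    // Create an RTSP frame
    mk_frame frame = mk_frame_create(
        VideoConstants::USE_HEVC ? MKCodecH265 : MKCodecH264,
        obj->dts, obj->dts,
        (const char *)encoded_data, data_size, NULL, NULL);

    // Feed it into the media stream, then release our reference
    mk_media_input_frame(obj->media, frame);
    mk_frame_unref(frame);
}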
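A note on the timestamps passed to `mk_frame_create`: the full listing keeps `dts` as an `int` and advances it by `1000 / fps` per frame, which at 15 fps truncates 66.67 ms to 66 ms on every step, so the stream clock runs slightly slow. A minimal alternative sketch, assuming a frame counter is available, derives each timestamp from the frame index instead. The function name `FrameTimestampMs` is hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// Millisecond timestamp of frame `frame_index` at `fps` frames per second.
// Deriving it from the index avoids accumulating per-frame rounding error.
inline std::uint64_t FrameTimestampMs(std::uint64_t frame_index, double fps) {
  return static_cast<std::uint64_t>(frame_index * 1000.0 / fps + 0.5);
}
```

At 15 fps this yields 67 ms for frame 1 and exactly 1000 ms for frame 15, whereas adding a truncated 66 ms per frame would reach only 990 ms.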

4. Build and run

4.1 The build command explained

g++ -o l4t_media_srv l4t_media_srv.cpp
-std=c++17
-I ../3rdparty/include
-I/usr/src/jetson_multimedia_api/include # L4T Multimedia API headers
-I /usr/local/cuda/include # CUDA headers
-I /usr/include/jsoncpp # JSON parsing library
# ... link the required libraries
-lcudart -lv4l2 -lpthread -lnvbufsurface -lnvbufsurftransform -lnvv4l2 -lmk_api -ljsoncpp

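Key libraries:

- libnvbufsurface.so: NvBufSurface buffer allocation and management
- libnvbufsurftransform.so: hardware-accelerated scaling, format conversion, and compositing (NvBufSurfTransform)
- libnvv4l2.so: NVIDIA's V4L2 library, used by the hardware encoder
- libmk_api.so: the ZLMediaKit C API, which provides the RTSP server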

4.2 Running the program

./l4t_media_srv DEV

4.3 Watching the stream

Open the following URL in VLC or any other RTSP player:

rtsp://<your-jetson-ip>:8554/live/multi_view

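5. Advanced features

5.1 Dynamic bitrate adjustment

The bitrate can be adjusted dynamically through an RTSP URL parameter:

rtsp://ip:8554/live/multi_view?bitrate=1500

When a client connects with this URL, the bitrate is automatically set to 1500 Kbps.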
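How the program extracts this parameter is not shown in this part of the article, so the following is only a sketch of one way to do it: scan the query string for `bitrate=<kbps>` and convert the value to bits per second. The function name `ParseBitrateBps` and the factor of 1000 per Kbps are assumptions.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string>

// Find "bitrate=<kbps>" in a URL query string such as "a=1&bitrate=1500".
// On success, stores the value converted to bps and returns true.
inline bool ParseBitrateBps(const std::string &query, std::uint32_t *bps_out) {
  const std::string key = "bitrate=";
  std::size_t pos = 0;
  while (pos <= query.size()) {
    std::size_t end = query.find('&', pos);
    if (end == std::string::npos) {
      end = query.size();
    }
    const std::string param = query.substr(pos, end - pos);
    if (param.compare(0, key.size(), key) == 0) {
      const std::string value = param.substr(key.size());
      // Reject empty values and values long enough to overflow 32 bits.
      if (value.empty() || value.size() > 6) {
        return false;
      }
      std::uint32_t kbps = 0;
      for (char c : value) {
        if (c < '0' || c > '9') {
          return false;
        }
        kbps = kbps * 10 + static_cast<std::uint32_t>(c - '0');
      }
      *bps_out = kbps * 1000;  // assumption: 1 Kbps = 1000 bps
      return true;
    }
    pos = end + 1;
  }
  return false;
}
```

The resulting value could then be passed to the encoder wrapper's `setBitrate()` from the full listing, ideally after clamping it to the configured maximum.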

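5.2 Forced key frames

When a new client connects, a key frame is inserted automatically, which shortens the time before the client can start displaying video.

5.3 Real-time statistics

The program periodically prints:

- Real-time frame rate (FPS)
- Processing latency
- Actual output bitrate
- Key frame information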
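The output bitrate figure follows from simple arithmetic: encoded bytes accumulated over a window, times 8, divided by the window length. The sketch below packages that calculation in a small helper. The class name `BitrateMeter` is made up for this example, and the division by 1024 mirrors the convention used in the full listing's callback.

```cpp
#include <cassert>
#include <cstddef>

// Accumulates encoded bytes and reports the average bitrate over a window.
class BitrateMeter {
 public:
  void AddFrame(std::size_t bytes) { total_bytes_ += bytes; }

  // Average bitrate in Kbit/s (1 Kbit = 1024 bits) over `elapsed_seconds`.
  // A successful read resets the accumulator for the next window; a
  // non-positive window returns 0 and leaves the accumulator untouched.
  double TakeKbps(double elapsed_seconds) {
    if (elapsed_seconds <= 0.0) {
      return 0.0;
    }
    const double kbps =
        static_cast<double>(total_bytes_) * 8.0 / elapsed_seconds / 1024.0;
    total_bytes_ = 0;
    return kbps;
  }

 private:
  std::size_t total_bytes_ = 0;
};
```

For example, 256 KiB of encoded data received within one second corresponds to 2048 Kbit/s.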

V. Resource Usage

VI. Complete Implementation

1. Create the main program source

cat > l4t_media_srv.cpp << 'EOF'

#include <algorithm>

#include <atomic>

#include <cassert>

#include <chrono>

#include <cmath>

#include <condition_variable>

#include <cstdlib>

#include <cstring>

#include <cuda_runtime.h>

#include <fcntl.h>

#include <fstream>

#include <functional>

#include <iomanip>

#include <iostream>

#include <json/json.h>

#include <linux/videodev2.h>

#include <map>

#include <memory>

#include <mutex>

#include <npp.h>

#include <nppi.h>

#include <pthread.h>

#include <queue>

#include <random>

#include <sstream>

#include <stdio.h>

#include <stdlib.h>

#include <string.h>

#include <string>

#include <sys/ioctl.h>

#include <sys/mman.h>

#include <sys/poll.h>

#include <thread>

#include <time.h>

#include <unistd.h>

#include <vector>

#include "NvBufSurface.h"

#include "NvBuffer.h"

#include "NvUtils.h"

#include "NvVideoEncoder.h"

#include "nvbuf_utils.h"

#include "nvbufsurface.h"

#include "mk_mediakit.h"

// Camera configuration

struct CameraConfig {

std::string device;

int width;

int height;

};

// Vehicle configuration (a named set of cameras)

struct VehicleConfig {

std::map<std::string,CameraConfig> camera_configs;

};

// ============================ Constants ============================

namespace VideoConstants {

constexpr auto PIXEL_FORMAT = V4L2_PIX_FMT_UYVY;

int IMG_BUFFER_COUNT = 2;

double DEFAULT_FPS = 15.0;

int FPS_CALCULATION_INTERVAL = 30;

bool USE_HEVC = false;

uint32_t MAX_BITRATE_MBPS = 2;

uint32_t ENCODER_BUFFER_COUNT = 1;

} // namespace VideoConstants

// ============================ Data structures ============================

/**

* @brief Rectangular region

*/

class Rect {

public:

int x, y, width, height;

Rect() = default;

Rect(int x, int y, int width, int height)

: x(x), y(y), width(width), height(height) {}

};

/**

* @brief Thread-safe queue

*/

template <typename T> class ThreadSafeQueue {

private:

mutable std::mutex mutex_;

std::queue<T> queue_;

std::condition_variable cond_;

public:

ThreadSafeQueue() = default;

// Non-copyable

ThreadSafeQueue(const ThreadSafeQueue &) = delete;

ThreadSafeQueue &operator=(const ThreadSafeQueue &) = delete;

void push(T value) {

std::lock_guard<std::mutex> lock(mutex_);

queue_.push(std::move(value));

cond_.notify_one();

}

bool try_pop(T &value) {

std::lock_guard<std::mutex> lock(mutex_);

if (queue_.empty()) {

return false;

}

value = std::move(queue_.front());

queue_.pop();

return true;

}

bool empty() const {

std::lock_guard<std::mutex> lock(mutex_);

return queue_.empty();

}

size_t size() const {

std::lock_guard<std::mutex> lock(mutex_);

return queue_.size();

}

void clear() {

std::lock_guard<std::mutex> lock(mutex_);

std::queue<T> empty_queue;

std::swap(queue_, empty_queue);

}

};

/**

* @brief Video buffer descriptor

*/

struct VideoBuffer {

int in_dmabuf_fd = -1;

int out_dmabuf_fd = -1;

double timestamp = 0.0;

bool is_in_use = false;

};

/**

* @brief Data shared between threads

*/

struct SharedVideoData {

int device_fd = -1;

std::vector<VideoBuffer> buffers;

std::atomic<bool> is_capturing{false};

std::atomic<int> latest_buffer_index{-1};

std::atomic<bool> frame_updated{false};

std::atomic<int> buffers_in_use_count{0};

std::mutex buffer_mutex;

};

/**

* @brief Viewport configuration

*/

struct ViewportConfig {

std::string name;

Rect position;

};

/**

* @brief FPS statistics

*/

struct FpsStatistics {

unsigned int frame_count = 0;

std::chrono::steady_clock::time_point last_calc_time;

std::string name;

double current_fps = 0.0;

};

// ============================ Utility functions ============================

namespace VideoUtils {

/**

* @brief Get the current monotonic time in seconds, with nanosecond resolution

*/

inline double GetHighResolutionTime() {

struct timespec time_spec;

clock_gettime(CLOCK_MONOTONIC, &time_spec);

return static_cast<double>(time_spec.tv_sec) +

static_cast<double>(time_spec.tv_nsec) / 1e9;

}

/**

* @brief Print a pixel format as its FourCC code

*/

inline void PrintPixelFormat(uint32_t pixel_format) {

std::printf("Pixel format: %c%c%c%c\n", pixel_format & 0xFF,

(pixel_format >> 8) & 0xFF, (pixel_format >> 16) & 0xFF,

(pixel_format >> 24) & 0xFF);

}

/**

* @brief Get the current local time as a string

*/

inline std::string GetCurrentTimeString() {

auto now = std::chrono::system_clock::now();

auto milliseconds = std::chrono::duration_cast<std::chrono::milliseconds>(

now.time_since_epoch()) %

1000;

auto timer = std::chrono::system_clock::to_time_t(now);

std::tm time_info = *std::localtime(&timer);

std::ostringstream output_stream;

output_stream << std::put_time(&time_info, "%Y-%m-%d %H:%M:%S");

output_stream << '.' << std::setfill('0') << std::setw(3)

<< milliseconds.count();

return output_stream.str();

}

/**

* @brief Update the FPS counter and print it periodically

*/

void CalculateAndPrintFps(FpsStatistics &fps_stats, double latency = 0.0) {

auto current_time = std::chrono::steady_clock::now();

fps_stats.frame_count++;

auto elapsed_seconds = std::chrono::duration_cast<std::chrono::seconds>(

current_time - fps_stats.last_calc_time)

.count();

if (elapsed_seconds >= VideoConstants::FPS_CALCULATION_INTERVAL) {

fps_stats.current_fps =

static_cast<double>(fps_stats.frame_count) / elapsed_seconds;

std::cout << "[" << fps_stats.name << "] Current FPS: " << std::fixed

<< std::setprecision(2) << fps_stats.current_fps

<< " (frames: " << fps_stats.frame_count

<< ", time: " << elapsed_seconds << "s";

if (latency > 0) {

std::cout << ", latency: " << latency << "ms";

}

std::cout << ")" << std::endl;

// Reset the counters

fps_stats.frame_count = 0;

fps_stats.last_calc_time = current_time;

}

}

/**

* @brief Dump a DMA buffer to a file

*/

int DumpDmaBuffer(const char *file_path, int plane_count, int dmabuf_fd) {

std::FILE *file = std::fopen(file_path, "wb");

if (!file) {

std::perror("Failed to open file for dumping");

return -1;

}

for (int plane = 0; plane < plane_count; ++plane) {

NvBufSurface *nvbuf_surf = nullptr;

int ret =

NvBufSurfaceFromFd(dmabuf_fd, reinterpret_cast<void **>(&nvbuf_surf));

if (ret != 0) {

std::fclose(file);

return -1;

}

ret = NvBufSurfaceMap(nvbuf_surf, 0, plane, NVBUF_MAP_READ_WRITE);

if (ret < 0) {

std::printf("%s NvBufSurfaceMap failed\n", file_path);

std::fclose(file);

return ret;

}

NvBufSurfaceSyncForCpu(nvbuf_surf, 0, plane);

for (uint32_t i = 0; i < nvbuf_surf->surfaceList->planeParams.height[plane];

++i) {

std::fwrite(reinterpret_cast<char *>(

nvbuf_surf->surfaceList->mappedAddr.addr[plane]) +

i * nvbuf_surf->surfaceList->planeParams.pitch[plane],

nvbuf_surf->surfaceList->planeParams.width[plane] *

nvbuf_surf->surfaceList->planeParams.bytesPerPix[plane],

1, file);

}

ret = NvBufSurfaceUnMap(nvbuf_surf, 0, plane);

if (ret < 0) {

std::printf("%s NvBufSurfaceUnMap failed\n", file_path);

std::fclose(file);

return ret;

}

}

std::fclose(file);

return 0;

}

} // namespace VideoUtils

// ============================ Error-checking macros ============================

#define CHECK_ENCODER_API(call, expected_ret) \
  do { \
    int ret = (call); \
    if (ret != (expected_ret)) { \
      std::cerr << "Encoder API call failed: " << #call << std::endl; \
      std::cerr << " File: " << __FILE__ << " Line: " << __LINE__ \
                << std::endl; \
      std::cerr << " Expected return value: " << (expected_ret) \
                << ", actual return value: " << ret << std::endl; \
      return false; \
    } \
  } while (0)

#define CHECK_CONDITION(condition, message) \
  do { \
    if (!(condition)) { \
      std::cerr << "Condition check failed: " << (message) << std::endl; \
      std::cerr << " File: " << __FILE__ << " Line: " << __LINE__ \
                << std::endl; \
      std::cerr << " Condition: " << #condition << std::endl; \
      return false; \
    } \
  } while (0)

// ============================ Video source class ============================

class V4l2CameraSource {

private:

int frame_width_ = 0;

int frame_height_ = 0;

int dst_width_ = 0;

int dst_height_ = 0;

int camera_width_ = 0;

int camera_height_ = 0;

std::thread capture_thread_;

int device_fd_ = -1;

bool is_streaming_ = false;

SharedVideoData shared_data_;

FpsStatistics fps_stats_;

std::string device_filename_;

public:

V4l2CameraSource(const std::string &device_filename, int camera_width,

int camera_height)

: camera_width_(camera_width), camera_height_(camera_height),

device_filename_(device_filename) {

fps_stats_.name = device_filename_;

}

~V4l2CameraSource() { Stop(); }

// Non-copyable

V4l2CameraSource(const V4l2CameraSource &) = delete;

V4l2CameraSource &operator=(const V4l2CameraSource &) = delete;

bool Initialize(int dst_width, int dst_height) {

dst_width_ = dst_width;

dst_height_ = dst_height;

if (!OpenDevice())

return false;

if (!SetFormat())

return false;

if (!SetupBuffers())

return false;

if (!StartStreaming())

return false;

return true;

}

void Stop() {

// Stop the capture thread

shared_data_.is_capturing = false;

if (capture_thread_.joinable()) {

capture_thread_.join();

}

// Release resources

CleanupResources();

}

int GetLatestFrame(int *out_dmabuf_fd, double &timestamp) {

if (!shared_data_.frame_updated)

return -1;

int current_index = shared_data_.latest_buffer_index;

if (current_index < 0 ||

current_index >= static_cast<int>(shared_data_.buffers.size())) {

return -1;

}

shared_data_.frame_updated = false;

timestamp = shared_data_.buffers[current_index].timestamp;

*out_dmabuf_fd = shared_data_.buffers[current_index].out_dmabuf_fd;

return current_index;

}

bool isFrameReady() {

if (!shared_data_.frame_updated)

return false;

int current_index = shared_data_.latest_buffer_index;

if (current_index < 0 ||

current_index >= static_cast<int>(shared_data_.buffers.size())) {

return false;

}

return true;

}

bool ReleaseBuffer(int buffer_index) {

if (buffer_index < 0 ||

buffer_index >= static_cast<int>(shared_data_.buffers.size())) {

return false;

}

std::lock_guard<std::mutex> lock(shared_data_.buffer_mutex);

shared_data_.buffers[buffer_index].is_in_use = false;

shared_data_.buffers_in_use_count--;

return true;

}

int GetWidth() const { return frame_width_; }

int GetHeight() const { return frame_height_; }

private:

bool OpenDevice() {

device_fd_ = open(device_filename_.c_str(), O_RDWR | O_NONBLOCK);

if (device_fd_ < 0) {

std::printf("Failed to open device: %s\n", device_filename_.c_str());

return false;

}

return true;

}

bool SetFormat() {

struct v4l2_format format = {};

format.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

if (ioctl(device_fd_, VIDIOC_G_FMT, &format) < 0) {

std::perror("Failed to get current format");

return false;

}

format.fmt.pix.width = camera_width_;

format.fmt.pix.height = camera_height_;

format.fmt.pix.pixelformat = VideoConstants::PIXEL_FORMAT;

format.fmt.pix.field = V4L2_FIELD_NONE;

if (ioctl(device_fd_, VIDIOC_S_FMT, &format) < 0) {

std::perror("Failed to set format");

VideoUtils::PrintPixelFormat(VideoConstants::PIXEL_FORMAT);

return false;

}

frame_width_ = format.fmt.pix.width;

frame_height_ = format.fmt.pix.height;

return true;

}

bool SetupBuffers() {

struct v4l2_requestbuffers buffer_request = {};

buffer_request.count = VideoConstants::IMG_BUFFER_COUNT;

buffer_request.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

buffer_request.memory = V4L2_MEMORY_DMABUF;

if (ioctl(device_fd_, VIDIOC_REQBUFS, &buffer_request) < 0) {

std::perror("Failed to request buffers");

return false;

}

int actual_buffer_count = std::min((int)buffer_request.count,

(int)VideoConstants::IMG_BUFFER_COUNT);

shared_data_.buffers.resize(actual_buffer_count);

shared_data_.device_fd = device_fd_;

shared_data_.is_capturing = true;

std::vector<int> input_fds(actual_buffer_count),output_fds(actual_buffer_count);

// Allocate the input buffers

NvBufSurf::NvCommonAllocateParams cam_params = {};

cam_params.memType = NVBUF_MEM_SURFACE_ARRAY;

cam_params.width = frame_width_;

cam_params.height = frame_height_;

cam_params.layout = NVBUF_LAYOUT_PITCH;

cam_params.colorFormat = NVBUF_COLOR_FORMAT_UYVY;

cam_params.memtag = NvBufSurfaceTag_CAMERA;

if (NvBufSurf::NvAllocate(&cam_params, actual_buffer_count,

input_fds.data())) {

return false;

}

// Allocate the output buffers

NvBufSurf::NvCommonAllocateParams output_params = {};

output_params.memType = NVBUF_MEM_SURFACE_ARRAY;

output_params.width = dst_width_;

output_params.height = dst_height_;

output_params.layout = NVBUF_LAYOUT_PITCH;

output_params.colorFormat = NVBUF_COLOR_FORMAT_YUV420;

output_params.memtag = NvBufSurfaceTag_VIDEO_CONVERT;

if (NvBufSurf::NvAllocate(&output_params, actual_buffer_count,output_fds.data())) {

return false;

}

// Queue the V4L2 buffers

for (int i = 0; i < actual_buffer_count; ++i) {

struct v4l2_buffer v4l2_buf = {};

v4l2_buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

v4l2_buf.memory = V4L2_MEMORY_DMABUF;

v4l2_buf.index = i;

v4l2_buf.m.fd = input_fds[i];

shared_data_.buffers[i].in_dmabuf_fd = input_fds[i];

shared_data_.buffers[i].out_dmabuf_fd = output_fds[i];

if (ioctl(device_fd_, VIDIOC_QBUF, &v4l2_buf) < 0) {

return false;

}

}

return true;

}

bool StartStreaming() {

enum v4l2_buf_type buffer_type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

if (ioctl(device_fd_, VIDIOC_STREAMON, &buffer_type) < 0) {

return false;

}

is_streaming_ = true;

capture_thread_ = std::thread(&V4l2CameraSource::CaptureThread, this);

return true;

}

void CleanupResources() {

if (is_streaming_ && device_fd_ >= 0) {

enum v4l2_buf_type buffer_type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

ioctl(device_fd_, VIDIOC_STREAMOFF, &buffer_type);

}

if (device_fd_ >= 0) {

close(device_fd_);

device_fd_ = -1;

}

}

void TransformFrame(int in_dmabuf_fd, int out_dmabuf_fd) {

NvBufSurf::NvCommonTransformParams transform_params = {};

transform_params.src_top = 0;

transform_params.src_left = 0;

transform_params.src_width = frame_width_;

transform_params.src_height = frame_height_;

transform_params.dst_top = 0;

transform_params.dst_left = 0;

transform_params.dst_width = dst_width_;

transform_params.dst_height = dst_height_;

transform_params.flag = static_cast<NvBufSurfTransform_Transform_Flag>(

NVBUFSURF_TRANSFORM_FILTER | NVBUFSURF_TRANSFORM_FLIP);

transform_params.flip = NvBufSurfTransform_None;

transform_params.filter = NvBufSurfTransformInter_Bilinear;

int ret =

NvBufSurf::NvTransform(&transform_params, in_dmabuf_fd, out_dmabuf_fd);

if (ret) {

std::cerr << "Error in frame transformation." << std::endl;

}

}

void CaptureThread() {

SharedVideoData &data = shared_data_;

struct pollfd poll_fd = {.fd = data.device_fd, .events = POLLIN};

fps_stats_.last_calc_time = std::chrono::steady_clock::now();

while (data.is_capturing) {

int poll_result = poll(&poll_fd, 1, 3);

if (poll_result < 0) {

std::perror("Poll failed");

break;

} else if (poll_result == 0) {

continue;

}

struct v4l2_buffer v4l2_buf = {};

v4l2_buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

v4l2_buf.memory = V4L2_MEMORY_DMABUF;

if (ioctl(data.device_fd, VIDIOC_DQBUF, &v4l2_buf) < 0) {

std::perror("Dequeue buffer failed");

continue;

}

auto input_ts = VideoUtils::GetHighResolutionTime();

int buffer_index = v4l2_buf.index;

if (buffer_index < 0 ||

buffer_index >= static_cast<int>(data.buffers.size())) {

std::printf("Invalid buffer index: %d\n", buffer_index);

continue;

}

double latency = 0.0;

{

std::lock_guard<std::mutex> lock(data.buffer_mutex);

if (data.buffers_in_use_count == 0 &&

!data.buffers[buffer_index].is_in_use) {

data.latest_buffer_index = buffer_index;

data.frame_updated = true;

data.buffers[buffer_index].is_in_use = true;

data.buffers_in_use_count++;

TransformFrame(shared_data_.buffers[buffer_index].in_dmabuf_fd,

shared_data_.buffers[buffer_index].out_dmabuf_fd);

auto now = VideoUtils::GetHighResolutionTime();

latency = (now - input_ts) * 1000.0;

data.buffers[buffer_index].timestamp = now;

}

}

if (latency > 0) {

VideoUtils::CalculateAndPrintFps(fps_stats_, latency);

}

if (ioctl(data.device_fd, VIDIOC_QBUF, &v4l2_buf) < 0) {

std::perror("Re-queue buffer failed");

break;

}

}

}

};

/**

* Callback type for encoded data

* @param encoded_data encoded bitstream data

* @param data_size size of the data in bytes

* @param is_key_frame whether the frame is a key frame

* @param user_data user-defined data

*/

using EncodedDataCallback =

std::function<void(const uint8_t *encoded_data, size_t data_size,

bool is_key_frame, void *user_data)>;

/**

* Hardware video encoder class

* Wraps the NVIDIA hardware encoder and encodes YUV420 frames to H.264 or H.265

*/

class HwVideoEncoder {

private:

// Encoding state flags

std::atomic<bool> is_encoding_done_;

std::atomic<bool> has_error_;

// Core encoder component

NvVideoEncoder *video_encoder_ = nullptr;

// Frame geometry and encoder state

uint32_t frame_width_;

uint32_t frame_height_;

uint32_t frame_size_; // size of one YUV420 frame in bytes

bool is_initialized_;

bool is_flushing_;

// Frame counters

uint32_t sent_frame_count_;

uint32_t received_frame_count_;

// Encoding parameters

uint32_t frame_rate_ = 0;

uint32_t idr_interval_ = 0;

uint32_t bitrate_ = 0;

uint32_t peak_bitrate_ = 0;

// Buffer management

uint32_t output_plane_buffer_index_;

bool capture_plane_eos_received_;

// Callback

EncodedDataCallback data_callback_ = nullptr;

void *callback_user_data_;

// Encoder limits

static constexpr uint32_t ENCODER_BUFFER_COUNT = 1;

static constexpr uint32_t MAX_BITRATE_MBPS = 2;

public:

/**

* Constructor

* @param width video width

* @param height video height

* @param frame_rate frame rate, 15 fps by default

* @param bitrate bitrate in bps, 1 Mbps by default

* @param idr_interval IDR frame interval, 30 frames by default

*/

HwVideoEncoder(uint32_t width, uint32_t height, uint32_t frame_rate = 15,

uint32_t bitrate = 1 * 1024 * 1024, uint32_t idr_interval = 30)

: frame_width_(width), frame_height_(height), frame_rate_(frame_rate),

idr_interval_(idr_interval), bitrate_(bitrate),

peak_bitrate_(bitrate * 2), video_encoder_(nullptr),

is_encoding_done_(false), has_error_(false), is_initialized_(false),

is_flushing_(false), sent_frame_count_(0), received_frame_count_(0),

output_plane_buffer_index_(0), capture_plane_eos_received_(false),

callback_user_data_(nullptr) {

frame_size_ = width * height * 3 / 2;

}

// Non-copyable and non-assignable

HwVideoEncoder(const HwVideoEncoder &) = delete;

HwVideoEncoder &operator=(const HwVideoEncoder &) = delete;

~HwVideoEncoder() { cleanup(); }

/**

* Set the callback that receives encoded data

* @param callback callback function

* @param user_data user-defined data

*/

void setEncodedDataCallback(EncodedDataCallback callback,

void *user_data = nullptr) {

data_callback_ = callback;

callback_user_data_ = user_data;

}

/**

* Set the IDR frame interval at runtime

* @param interval IDR interval in frames

* @return true on success

*/

bool setIdrInterval(uint32_t interval) {

if (!video_encoder_ || !is_initialized_) {

std::cerr << "Encoder not initialized; cannot set the IDR interval" << std::endl;

return false;

}

idr_interval_ = interval;

int ret = video_encoder_->setIDRInterval(interval);

if (ret != 0) {

std::cerr << "Failed to set the IDR interval: " << ret << std::endl;

return false;

}

return true;

}

/**

* Set the bitrate at runtime

* @param bitrate bitrate in bps

* @param peak_bitrate peak bitrate in bps; bitrate * 2 is used when 0

* @return true on success

*/

bool setBitrate(uint32_t bitrate, uint32_t peak_bitrate = 0) {

if (!video_encoder_ || !is_initialized_) {

std::cerr << "Encoder not initialized; cannot set the bitrate" << std::endl;

return false;

}

bitrate_ = bitrate;

peak_bitrate_ = (peak_bitrate > 0) ? peak_bitrate : bitrate * 2;

int ret = video_encoder_->setBitrate(bitrate_);

if (ret != 0) {

std::cerr << "Failed to set the bitrate: " << ret << std::endl;

return false;

}

return true;

}

/**

* Force an IDR frame

* @return true on success

*/

bool forceIdrFrame() {

if (!video_encoder_ || !is_initialized_) {

std::cerr << "Encoder not initialized; cannot force an IDR frame" << std::endl;

return false;

}

int ret = video_encoder_->forceIDR();

if (ret != 0) {

std::cerr << "Failed to force an IDR frame: " << ret << std::endl;

return false;

}

return true;

}

/**

* Get the current encoding statistics

*/

void getStats(uint32_t &sent_frames, uint32_t &received_frames) const {

sent_frames = sent_frame_count_;

received_frames = received_frame_count_;

}

/**

* Get the encoder state

*/

bool isEncoding() const { return !is_encoding_done_ && !has_error_; }

bool hasError() const { return has_error_; }

/**

* Release all resources to prevent leaks

*/

void cleanup() {

std::cout << "Releasing encoder resources..." << std::endl;

if (video_encoder_ && is_initialized_) {

try {

video_encoder_->capture_plane.stopDQThread();

video_encoder_->output_plane.setStreamStatus(false);

video_encoder_->capture_plane.setStreamStatus(false);

delete video_encoder_;

} catch (const std::exception &e) {

std::cerr << "Exception while releasing the encoder: " << e.what() << std::endl;

}

video_encoder_ = nullptr;

}

is_initialized_ = false;

}

/**

* Initialize the encoder

* @return true on success, false on failure

*/

bool initialize() {

int encoder_pixfmt =

VideoConstants::USE_HEVC ? V4L2_PIX_FMT_H265 : V4L2_PIX_FMT_H264;

try {

video_encoder_ = NvVideoEncoder::createVideoEncoder("enc0");

if (!video_encoder_)

return false;

} catch (...) {

return false;

}

if (video_encoder_->setCapturePlaneFormat(

encoder_pixfmt, frame_width_, frame_height_,

MAX_BITRATE_MBPS * 1024 * 1024) < 0 ||

video_encoder_->setOutputPlaneFormat(V4L2_PIX_FMT_YUV420M, frame_width_,

frame_height_) < 0) {

return false;

}

// Simplified configuration

if (VideoConstants::USE_HEVC) {

video_encoder_->setProfile(V4L2_MPEG_VIDEO_H265_PROFILE_MAIN10);

} else {

video_encoder_->setProfile(V4L2_MPEG_VIDEO_H264_PROFILE_HIGH);

}

video_encoder_->setBitrate(bitrate_);

video_encoder_->setRateControlMode(V4L2_MPEG_VIDEO_BITRATE_MODE_CBR);

video_encoder_->setIDRInterval(idr_interval_);

video_encoder_->setHWPresetType(V4L2_ENC_HW_PRESET_ULTRAFAST);

video_encoder_->setMaxPerfMode(true);

CHECK_ENCODER_API(video_encoder_->setNumBFrames(0), 0);

CHECK_ENCODER_API(video_encoder_->setNumReferenceFrames(0), 0);

// CHECK_ENCODER_API(video_encoder_->setIFrameInterval(idr_interval_/2), 0);

// Request buffers on the output and capture planes

if (video_encoder_->output_plane.reqbufs(

V4L2_MEMORY_DMABUF, VideoConstants::ENCODER_BUFFER_COUNT) ||

video_encoder_->capture_plane.setupPlane(

V4L2_MEMORY_MMAP, VideoConstants::ENCODER_BUFFER_COUNT, true,

false) < 0) {

return false;

}

video_encoder_->capture_plane.setDQThreadCallback(capturePlaneDqCallback);

if (video_encoder_->capture_plane.startDQThread(this) < 0) {

return false;

}

video_encoder_->output_plane.setStreamStatus(true);

video_encoder_->capture_plane.setStreamStatus(true);

for (uint32_t i = 0; i < video_encoder_->capture_plane.getNumBuffers(); i++) {

struct v4l2_buffer v4l2_buf;

struct v4l2_plane planes[MAX_PLANES];

memset(&v4l2_buf, 0, sizeof(v4l2_buf));

memset(planes, 0, MAX_PLANES * sizeof(struct v4l2_plane));

v4l2_buf.index = i;

v4l2_buf.m.planes = planes;

int ret = video_encoder_->capture_plane.qBuffer(v4l2_buf, NULL);

if (ret < 0) {

std::cerr << "Error while queueing buffer at capture plane" << std::endl;

return false;

}

}

is_initialized_ = true;

return true;

}

int getOutputBufferSize() {

return video_encoder_->output_plane.getNumBuffers();

}

bool setupOutputBuffer(int index, int output_plane_fd) {

if (!video_encoder_)

return false;

struct v4l2_buffer v4l2_buf = {};

struct v4l2_plane planes[MAX_PLANES] = {};

NvBuffer *buffer = video_encoder_->output_plane.getNthBuffer(index);

v4l2_buf.index = index;

v4l2_buf.m.planes = planes;

v4l2_buf.type = V4L2_BUF_TYPE_VIDEO_OUTPUT_MPLANE;

v4l2_buf.memory = V4L2_MEMORY_DMABUF;

if (video_encoder_->output_plane.mapOutputBuffers(v4l2_buf,

output_plane_fd) < 0) {

return false;

}

for (uint32_t j = 0; j < buffer->n_planes; j++) {

v4l2_buf.m.planes[j].bytesused =

buffer->planes[j].fmt.stride * buffer->planes[j].fmt.height;

}

return video_encoder_->output_plane.qBuffer(v4l2_buf, nullptr) >= 0;

}

int deQueue(struct v4l2_buffer &v4l2_buf) {

NvBuffer *buffer;

if (video_encoder_->output_plane.dqBuffer(v4l2_buf, &buffer, NULL, 10) <

0) {

std::cerr << "ERROR while DQing buffer at output plane" << std::endl;

return -1;

}

for (uint32_t j = 0; j < buffer->n_planes; j++) {

v4l2_buf.m.planes[j].bytesused =

buffer->planes[j].fmt.stride * buffer->planes[j].fmt.height;

}

return v4l2_buf.index;

}

bool queueDirect(struct v4l2_buffer &v4l2_buf) {

return video_encoder_->output_plane.qBuffer(v4l2_buf, nullptr) >= 0;

}

private:

/**

* Mark the end of the stream

*/

void setupEndOfStream(struct v4l2_buffer &v4l2_buf) {

is_flushing_ = true;

v4l2_buf.m.planes[0].m.userptr = 0;

v4l2_buf.m.planes[0].bytesused = 0;

v4l2_buf.m.planes[1].bytesused = 0;

v4l2_buf.m.planes[2].bytesused = 0;

}

/**

* Stop encoding

*/

void stopEncoding() {

is_encoding_done_ = true;

if (video_encoder_ && is_initialized_) {

video_encoder_->capture_plane.stopDQThread();

video_encoder_->capture_plane.waitForDQThread(1000);

}

}

/**

* Capture-plane dequeue thread callback (static)

* Handles frames that have finished encoding

*/

static bool capturePlaneDqCallback(struct v4l2_buffer *v4l2_buf,

NvBuffer *buffer, NvBuffer *shared_buffer,

void *user_data) {

// Validate the arguments

if (!v4l2_buf || !buffer || !user_data) {

std::cerr << "capturePlaneDqCallback invalid" << std::endl;

return false;

}

HwVideoEncoder *encoder_instance = static_cast<HwVideoEncoder *>(user_data);

return encoder_instance->processEncodedFrame(v4l2_buf, buffer);

}

/**

* Process an encoded frame (member function)

*/

bool processEncodedFrame(struct v4l2_buffer *v4l2_buf, NvBuffer *buffer) {

if (buffer->planes[0].bytesused == 0)

return false;

// Fetch the encoder metadata

v4l2_ctrl_videoenc_outputbuf_metadata enc_metadata;

bool is_key_frame =

(video_encoder_->getMetadata(v4l2_buf->index, enc_metadata) == 0)

? enc_metadata.KeyFrame

: false;

received_frame_count_++;

// Deliver the encoded data through the callback

if (data_callback_) {

for (uint32_t plane_index = 0; plane_index < buffer->n_planes;

plane_index++) {

if (buffer->planes[plane_index].bytesused > 0) {

data_callback_(reinterpret_cast<const uint8_t *>(

buffer->planes[plane_index].data),

buffer->planes[plane_index].bytesused, is_key_frame,

callback_user_data_);

}

}

}

return video_encoder_->capture_plane.qBuffer(*v4l2_buf, nullptr) >= 0;

}

};

class FrameRateController {

private:

std::chrono::steady_clock::time_point next_frame_time_;

std::chrono::microseconds frame_interval_;

public:

FrameRateController(double fps)

: frame_interval_(static_cast<int64_t>(1000000.0 / fps)) {

next_frame_time_ = std::chrono::steady_clock::now();

}

void waitNextFrame() {

next_frame_time_ += frame_interval_;

std::this_thread::sleep_until(next_frame_time_);

}

};

// Frame synchronization

bool waitForAllCamerasReady(

std::vector<std::unique_ptr<V4l2CameraSource>> &video_sources,

int timeout_ms = 33) {

auto start_time = std::chrono::steady_clock::now();

while (std::chrono::duration_cast<std::chrono::milliseconds>(

std::chrono::steady_clock::now() - start_time)

.count() < timeout_ms) {

bool all_ready = true;

for (auto &source : video_sources) {

if (!source->isFrameReady()) {

all_ready = false;

break;

}

}

if (all_ready)

return true;

std::this_thread::sleep_for(std::chrono::milliseconds(1));

}

return false;

}

struct MultiCameraStreamer {

mk_media media = nullptr;

int dts = 0;

HwVideoEncoder *m_encoder;

float total_encode_size = 0;

double last_ts=0;

std::map<std::string, VehicleConfig> vehicles;

std::vector<ViewportConfig> viewport_configs;

std::vector<std::unique_ptr<V4l2CameraSource>> video_sources;

const int output_width = 1920;

const int output_height = 1080;

std::vector<int> fds;

int output_buffer_size;

NvBufSurfTransformCompositeBlendParamsEx composite_params;

FpsStatistics main_fps_stats = {0};

static void rtspWriteCallback(const uint8_t *encoded_data, size_t data_size,

bool is_key_frame, void *user_data) {

MultiCameraStreamer *obj = (MultiCameraStreamer *)(user_data);

double now = VideoUtils::GetHighResolutionTime();

obj->total_encode_size += data_size;

float duration = now - obj->last_ts;

if (is_key_frame && duration > VideoConstants::FPS_CALCULATION_INTERVAL) {

float real_bitrate=obj->total_encode_size * 8 / duration / 1024;

printf("Real bitrate:%.3f(Kbit/s) Keyframe:%zu(KB)\n",

real_bitrate, data_size / 1024);

obj->last_ts = now;

obj->total_encode_size = 0;

}

mk_frame frame = mk_frame_create(

VideoConstants::USE_HEVC ? MKCodecH265 : MKCodecH264, obj->dts,

obj->dts, (const char *)encoded_data, data_size, NULL, NULL);

obj->dts += (1000 / VideoConstants::DEFAULT_FPS);

mk_media_input_frame(obj->media, frame);

mk_frame_unref(frame);

}

bool Open(std::string device_type) {

main_fps_stats.last_calc_time = std::chrono::steady_clock::now();

main_fps_stats.name = "multi_view";

const char *jsonConfigFile = "camera_cfg.json";

std::ifstream configFile(jsonConfigFile);

if (!configFile.is_open()) {

printf("Failed to open JSON config file: %s\n", jsonConfigFile);

return false;

}

Json::Value root;

Json::Reader reader;

if (!reader.parse(configFile, root)) {

printf("Failed to parse JSON config file: %s\n", reader.getFormattedErrorMessages().c_str());

return false;

}

for (auto name : root.getMemberNames()) {

if(name=="global")

{

Json::Value value = root[name];

VideoConstants::IMG_BUFFER_COUNT = value["camera_bufers"].asInt();

VideoConstants::ENCODER_BUFFER_COUNT = value["encoder_buffers"].asInt();

VideoConstants::USE_HEVC = value["use_hevc"].asBool();

VideoConstants::DEFAULT_FPS = value["fps"].asInt();

VideoConstants::MAX_BITRATE_MBPS = value["max_bitrate_mps"].asInt();

VideoConstants::FPS_CALCULATION_INTERVAL = value["print_interval_sec"].asInt();

}

else if(name=="layout")

{

Json::Value value = root[name];

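// getMemberNames() returns keys in sorted order, which differs from the

// camera order used for video_sources below, so read the layout in that

// same fixed order to keep viewport i paired with camera i.

const std::vector<std::string> layout_order = {"left_front","left_rear","rear","right_rear","right_front","front"};

for (const auto &cam_name : layout_order)

{

viewport_configs.push_back({cam_name,

Rect(value[cam_name]["x"].asInt(),

value[cam_name]["y"].asInt(),

value[cam_name]["width"].asInt(),

value[cam_name]["height"].asInt())});

}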

}

else

{

Json::Value vehicle_node = root[name];

VehicleConfig config;

for (auto cam_name : vehicle_node.getMemberNames())

{

config.camera_configs[cam_name].device = vehicle_node[cam_name]["dev"].asString();

config.camera_configs[cam_name].width = vehicle_node[cam_name]["width"].asInt();

config.camera_configs[cam_name].height = vehicle_node[cam_name]["height"].asInt();

}

vehicles[name] = config;

}

}

if (vehicles.find(device_type) == vehicles.end()) {

printf("Cannot find device type: %s\n", device_type.c_str());

return false;

}

VehicleConfig &config = vehicles[device_type];

std::vector<std::string> camera_names={"left_front","left_rear","rear","right_rear","right_front","front"};

for(auto name:camera_names)

{

video_sources.push_back(std::make_unique<V4l2CameraSource>(

config.camera_configs[name].device, config.camera_configs[name].width,

config.camera_configs[name].height));

}

int viewport_size = viewport_configs.size();

for (int i = 0; i < viewport_size; i++) {

auto position = viewport_configs[i].position;

video_sources[i]->Initialize(position.width, position.height);

}

codec_args v_args = {0};

media = mk_media_create("__defaultVhost__", "live", "multi_view", 0, 0, 0);

auto v_track = mk_track_create(VideoConstants::USE_HEVC ? MKCodecH265 : MKCodecH264, &v_args);

mk_media_init_track(media, v_track);

mk_media_init_complete(media);

m_encoder = new HwVideoEncoder(output_width, output_height,

VideoConstants::DEFAULT_FPS, 2024 * 1024,VideoConstants::DEFAULT_FPS);

m_encoder->setEncodedDataCallback(rtspWriteCallback, this);

if (!m_encoder->initialize()) {

std::cerr << "m_encoder->initialize error" << std::endl;

return false;

}

output_buffer_size = m_encoder->getOutputBufferSize();

fds.resize(output_buffer_size);

NvBufSurf::NvCommonAllocateParams camparams_420p = {0};

camparams_420p.memType = NVBUF_MEM_SURFACE_ARRAY;

camparams_420p.width = output_width;

camparams_420p.height = output_height;

camparams_420p.layout = NVBUF_LAYOUT_PITCH;

camparams_420p.colorFormat = NVBUF_COLOR_FORMAT_YUV420;

camparams_420p.memtag = NvBufSurfaceTag_VIDEO_ENC;

if (NvBufSurf::NvAllocate(&camparams_420p, output_buffer_size,fds.data())) {

perror("Failed to create fd_420p NvBuffer");

return false;

}

for (int i = 0; i < output_buffer_size; i++) {

if (!m_encoder->setupOutputBuffer(i, fds[i])) {

std::cerr << "setupOutputBuffer failed for buffer " << i << std::endl;

return false;

}

}

memset(&composite_params, 0, sizeof(composite_params));

composite_params.params.composite_blend_flag =NVBUFSURF_TRANSFORM_COMPOSITE;

composite_params.dst_comp_rect = static_cast<NvBufSurfTransformRect *>(malloc(sizeof(NvBufSurfTransformRect) * video_sources.size()));

composite_params.src_comp_rect = static_cast<NvBufSurfTransformRect *>(malloc(sizeof(NvBufSurfTransformRect) * video_sources.size()));

composite_params.params.composite_blend_filter =NvBufSurfTransformInter_Algo3;

for (size_t i = 0; i < video_sources.size(); i++) {

auto position = viewport_configs[i].position;

composite_params.src_comp_rect[i].left = 0;

composite_params.src_comp_rect[i].top = 0;

composite_params.src_comp_rect[i].width =position.width;

composite_params.src_comp_rect[i].height =position.height;

composite_params.dst_comp_rect[i].left = position.x;

composite_params.dst_comp_rect[i].top = position.y;

composite_params.dst_comp_rect[i].width =position.width;

composite_params.dst_comp_rect[i].height =position.height;

}

return true;

}

bool Run() {

FrameRateController fps_controller(VideoConstants::DEFAULT_FPS);

struct v4l2_buffer v4l2_buf;

struct v4l2_plane planes[MAX_PLANES];

while (1) {

fps_controller.waitNextFrame();

waitForAllCamerasReady(video_sources);

memset(&v4l2_buf, 0, sizeof(v4l2_buf));

memset(planes, 0, sizeof(planes));

v4l2_buf.m.planes = planes;

int output_plane_index = m_encoder->deQueue(v4l2_buf);

if (output_plane_index < 0) {

printf("deQueue error\n");

continue;

}

std::vector<int> buffer_index_arr;

std::vector<int> index_arr;

std::vector<NvBufSurface *> batch_surf;

NvBufSurface *pdstSurf;

int ret =NvBufSurfaceFromFd(fds[output_plane_index], (void **)(&pdstSurf));

if (ret != 0) {

printf("NvBufSurfaceFromFd error:%d\n", ret);

return false; // Run() returns bool; -1 would convert to true

}

double camera_ts;

bool flag=true;

for (int i = 0; i < video_sources.size(); i++) {

int out_dmabuf_fd;

int buffer_index =video_sources[i]->GetLatestFrame(&out_dmabuf_fd, camera_ts);

if (buffer_index <0 ) {

printf("%d GetLatestFrame error:%d\n", i, buffer_index);

flag=false;

break;

}

NvBufSurface *nvbuf_surf = 0;

int ret = NvBufSurfaceFromFd(out_dmabuf_fd, (void **)(&nvbuf_surf));

if (ret != 0) {

printf("NvBufSurfaceFromFd error:%d\n", ret);

flag=false;

break;

}

buffer_index_arr.push_back(buffer_index);

index_arr.push_back(i);

batch_surf.push_back(nvbuf_surf);

}

if(flag)

{

composite_params.params.input_buf_count = buffer_index_arr.size();

ret = NvBufSurfTransformMultiInputBufCompositeBlend(batch_surf.data(), pdstSurf, &composite_params);

if (ret) {

std::cerr << "NvBufSurfTransformMultiInputBufCompositeBlend error: " << ret << std::endl;

return false; // Run() returns bool; -1 would convert to true

}

}

for (int i = 0; i < buffer_index_arr.size(); i++) {

video_sources[index_arr[i]]->ReleaseBuffer(buffer_index_arr[i]);

}

if (m_encoder->isEncoding()) {

if (!m_encoder->queueDirect(v4l2_buf)) {

std::cerr << "queueDirect err: " << std::endl;

break;

}

}

auto t1 = VideoUtils::GetHighResolutionTime();

VideoUtils::CalculateAndPrintFps(main_fps_stats, (t1 - camera_ts) * 1000);

}

return true;

}

};

MultiCameraStreamer *inst = nullptr;

void API_CALL on_mk_media_play(const mk_media_info url_info,

const mk_auth_invoker invoker,

const mk_sock_info sender) {

if (!inst) {

return;

}

std::string stream_name = mk_media_info_get_stream(url_info);

std::string stream_params = mk_media_info_get_params(url_info);

printf("on_mk_media_play:%s [%s]\n", stream_name.c_str(), stream_params.c_str());

// Parse the bitrate parameter (in Kbit/s) from the URL query string

int bitrate = 0;

size_t bitrate_pos = stream_params.find("bitrate=");

if (bitrate_pos != std::string::npos) {

size_t value_start = bitrate_pos + 8; // "bitrate=" is 8 characters long

size_t value_end = stream_params.find('&', value_start);

std::string bitrate_str;

if (value_end == std::string::npos) {

bitrate_str = stream_params.substr(value_start);

} else {

bitrate_str = stream_params.substr(value_start, value_end - value_start);

}

try {

bitrate = std::stoi(bitrate_str);

printf("bitrate: %d\n", bitrate);

} catch (const std::exception &e) {

printf("Failed to parse bitrate parameter: %s\n", e.what());

return;

}

}

// If a valid bitrate was parsed, apply it to the encoder

if (bitrate > 0) {

inst->m_encoder->setBitrate(bitrate * 1024);

}

inst->m_encoder->forceIdrFrame();

mk_auth_invoker_do(invoker, NULL);

}

int main(int argc, char *argv[]) {

printf("BuildTime:%s %s\n", __DATE__, __TIME__);

if (argc != 2) {

printf("Usage: %s device_type[W3,W7,67]\n", argv[0]);

return -1;

}

mk_config config = {

.thread_num = 4,

.log_level = 0,

.log_mask = LOG_CONSOLE,

.log_file_path = NULL,

.log_file_days = 0,

.ini_is_path = 1,

.ini = "mk_mediakit.ini",

.ssl_is_path = 1,

.ssl = NULL,

.ssl_pwd = NULL,

};

mk_env_init(&config);

mk_rtsp_server_start(8554, 0);

mk_events events = {.on_mk_media_changed = NULL,

.on_mk_media_publish = NULL,

.on_mk_media_play = on_mk_media_play,

.on_mk_media_not_found = NULL,

.on_mk_media_no_reader = NULL,

.on_mk_http_request = NULL,

.on_mk_http_access = NULL,

.on_mk_http_before_access = NULL,

.on_mk_rtsp_get_realm = NULL,

.on_mk_rtsp_auth = NULL,

.on_mk_record_mp4 = NULL,

.on_mk_shell_login = NULL,

.on_mk_flow_report = NULL};

mk_events_listen(&events);

inst = new MultiCameraStreamer();

if(inst->Open(argv[1]))

{

inst->Run();

}

return 0;

}

EOF
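In rtspWriteCallback above, dts advances by 1000 / DEFAULT_FPS using integer division. With fps set to 15 that is 66 ms per frame, so the timestamps fall about 10 ms behind for every second of video. Whether this matters depends on the player. The following is a minimal sketch of a drift-free alternative, assuming a frame counter is kept instead of an accumulated dts; both function names are hypothetical and not part of the original program.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper (not in the original program): timestamp in
// milliseconds of frame number `frame_index` at `fps` frames per second.
// Multiplying before dividing keeps the rounding error below 1 ms for
// every frame instead of letting it accumulate.
inline int64_t frameTimestampMs(int64_t frame_index, int fps) {
    return frame_index * 1000 / fps;
}

// For comparison: the value the accumulating form (dts += 1000 / fps)
// reaches after `frame_count` frames.
inline int64_t accumulatedTimestampMs(int64_t frame_count, int fps) {
    int64_t dts = 0;
    for (int64_t i = 0; i < frame_count; ++i) {
        dts += 1000 / fps;  // integer division truncates 66.67 to 66
    }
    return dts;
}
```

At 15 fps, one minute of video is 900 frames: the counter-based form gives 60000 ms, while the accumulating form gives 59400 ms.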

2. Generate the main program configuration file

cat > camera_cfg.json <<'EOF'

{

"global":{

"camera_bufers":2,

"encoder_buffers":2,

"use_hevc":false,

"fps":15,

"max_bitrate_mps":2,

"print_interval_sec":30

},

"layout":{

"left_front": {"x":0, "y":190, "width":432, "height":274},

"left_rear": {"x":0, "y":648, "width":584, "height":402},

"rear": {"x":668, "y":648, "width":584, "height":402},

"right_rear": {"x":1330, "y":648, "width":584, "height":402},

"right_front": {"x":1488, "y":190, "width":432, "height":274},

"front": {"x":444, "y":22, "width":1034, "height":612}

},

"DEV":{

"left_front": {"dev":"/dev/video1","width":1920,"height":1080},

"left_rear": {"dev":"/dev/video4","width":1920,"height":1080},

"rear": {"dev":"/dev/video3","width":1920,"height":1080},

"right_rear": {"dev":"/dev/video2","width":1920,"height":1080},

"right_front":{"dev":"/dev/video0","width":1920,"height":1080},

"front": {"dev":"/dev/video7","width":1920,"height":1080}

}

}

EOF
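The layout section places six viewports on the 1920x1080 output canvas. A rectangle that extends past the canvas, or two rectangles that overlap by accident, are easy mistakes to make when editing these numbers by hand. The following is a small sketch of a sanity check, assuming a stand-in LayoutRect type (hypothetical, not the program's ViewportConfig). Treating overlap as an error is an assumption about the intended layout, not a requirement of the compositor.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical stand-in for one entry of the "layout" section.
struct LayoutRect {
    std::string name;
    int x, y, width, height;
};

// True if the rectangle lies entirely inside a canvas_w x canvas_h frame.
inline bool fitsCanvas(const LayoutRect &r, int canvas_w, int canvas_h) {
    return r.x >= 0 && r.y >= 0 && r.width > 0 && r.height > 0 &&
           r.x + r.width <= canvas_w && r.y + r.height <= canvas_h;
}

// True if the two rectangles share at least one pixel.
inline bool overlaps(const LayoutRect &a, const LayoutRect &b) {
    return a.x < b.x + b.width && b.x < a.x + a.width &&
           a.y < b.y + b.height && b.y < a.y + a.height;
}

// True when every rectangle fits the canvas and no two overlap.
inline bool layoutIsValid(const std::vector<LayoutRect> &rects,
                          int canvas_w, int canvas_h) {
    for (std::size_t i = 0; i < rects.size(); ++i) {
        if (!fitsCanvas(rects[i], canvas_w, canvas_h)) return false;
        for (std::size_t j = i + 1; j < rects.size(); ++j) {
            if (overlaps(rects[i], rects[j])) return false;
        }
    }
    return true;
}

// The six viewports exactly as listed in camera_cfg.json.
inline std::vector<LayoutRect> exampleLayout() {
    return {
        {"left_front", 0, 190, 432, 274},
        {"left_rear", 0, 648, 584, 402},
        {"rear", 668, 648, 584, 402},
        {"right_rear", 1330, 648, 584, 402},
        {"right_front", 1488, 190, 432, 274},
        {"front", 444, 22, 1034, 612},
    };
}
```

Calling layoutIsValid(exampleLayout(), 1920, 1080) on the values above passes both checks, since right_front ends exactly at x = 1920 and the bottom row ends at y = 1050.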

3. Generate the RTSP server configuration file

cat > mk_mediakit.ini <<'EOF'

[general]

mergeWriteMS=0

enableVhost=0

[protocol]

enable_audio=0

enable_hls=0

enable_hls_fmp4=0

enable_mp4=0

enable_rtmp=0

enable_ts=0

enable_fmp4=0

[rtp_proxy]

lowLatency=1

gop_cache=1

[rtp]

lowLatency=1

tcp_send_buffer_size = 0

max_rtp_check_delay_ms = 500

h264_stap_a=1

videoMtuSize=1400

[rtsp]

directProxy=0

lowLatency=1

tcp_send_buffer_size = 0

max_rtp_check_delay_ms = 500

EOF

4. Compile

g++ -o l4t_media_srv l4t_media_srv.cpp \
-std=c++17 -I ../3rdparty/include \
-I/usr/src/jetson_multimedia_api/include \
-I /usr/local/cuda/include -I /usr/include/jsoncpp -I /opt/apollo/neo/include \
/usr/src/jetson_multimedia_api/samples/common/classes/NvVideoEncoder.cpp \
/usr/src/jetson_multimedia_api/samples/common/classes/NvLogging.cpp \
/usr/src/jetson_multimedia_api/samples/common/classes/NvV4l2Element*.cpp \
/usr/src/jetson_multimedia_api/samples/common/classes/NvElement*.cpp \
/usr/src/jetson_multimedia_api/samples/common/classes/NvBufSurface.cpp \
/usr/src/jetson_multimedia_api/samples/common/classes/NvBuffer.cpp \
-L /usr/local/cuda/lib64 -L/usr/lib/aarch64-linux-gnu/tegra -L ../3rdparty/lib \
-lcudart -lv4l2 -lpthread -lnvbufsurface -lnvbufsurftransform -lnvv4l2 -lmk_api -ljsoncpp \
-Wl,-rpath=../3rdparty/lib

5. Run

./l4t_media_srv DEV
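Once the server is running, the stream is published on port 8554 under the path live/multi_view, and a player can append a bitrate query parameter to the URL. The play callback multiplies the value by 1024, so it is interpreted as Kbit/s. The callback parses the parameter inline; the following is a sketch of the same logic as a standalone, testable helper. The name parseBitrateKbps is hypothetical. Unlike the original find("bitrate="), this version only accepts the key at the start of the query or right after '&', and it returns 0 instead of throwing on malformed input.

```cpp
#include <cassert>
#include <cstddef>
#include <exception>
#include <string>

// Hypothetical helper: returns the value of "bitrate=" in a query string
// such as "bitrate=2048&foo=1", or 0 if the key is absent or its value is
// not a positive integer.
inline int parseBitrateKbps(const std::string &params) {
    const std::string key = "bitrate=";
    std::size_t pos = 0;
    while ((pos = params.find(key, pos)) != std::string::npos) {
        // Accept the key only at the start or right after '&', so that
        // "maxbitrate=..." is not mistaken for "bitrate=...".
        if (pos == 0 || params[pos - 1] == '&') break;
        pos += key.size();
    }
    if (pos == std::string::npos) return 0;

    const std::size_t start = pos + key.size();
    const std::size_t end = params.find('&', start);
    const std::string value = (end == std::string::npos)
                                  ? params.substr(start)
                                  : params.substr(start, end - start);
    try {
        std::size_t used = 0;
        const int v = std::stoi(value, &used);
        // Reject trailing garbage ("12x") and non-positive values.
        return (used == value.size() && v > 0) ? v : 0;
    } catch (const std::exception &) {
        return 0;  // empty, non-numeric, or out-of-range value
    }
}
```

A caller would then apply the result only when it is positive, as the original callback does with its bitrate > 0 check.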
